Yes. I noticed the slightest hint of sarcasm in the title of this post.

This post covers four commonly used measurement techniques that 9 times out of 10 work against the evolution of Reporting Squirrels into Analysis Ninjas.

I'll also admit that most of the time when I encounter them I might think slightly less of you (especially if you present the aggregate version to me rather than the segmented view; presenting the segmented view at least buys you time to explain :)).

If I am being slightly tough-minded here, it is only because I am hugely upset that analytics on the web is deeply under-leveraged, though the good lord knows we try, pumping out KPIs by the minute.

One root cause of this under-leveraging is our dashboards, crammed full of metrics that use these four measurement techniques. The end result: data puking, not insight revelation.

So who are the four amigos?

Each is a technique that, when used "as normal", actively hinders your ability to effectively communicate the insights your data contains.

Only one caveat: I am not saying these techniques are evil. What I am saying is don't be "default" when using them, be smart (or don't).

Before we get going here's my definition of what a Key Performance Indicator is:

Measures that help you understand how you are doing against your objectives.

Note the stress on Measures. And Objectives. If it doesn't meet Both criteria, it's not a KPI.

With that out of the way, let's understand why Averages, Percentages, Ratios and Compound Metrics are four usually disappointing measurement techniques.

Raise your hand if you are average. Ok, just Ray? No one else?

Raise your hand if your visit to any website reflects an average visit. Just you, Kristen?

No one is "average" and no user experience is "average". But Averages are everywhere because: 1) well they are everywhere, which feeds the cycle and 2) they are an easy way to aggregate (roll up) information so that others can see it more easily.

Sadly seeing it more easily does not mean we actually understand and can identify insights.

Take a look at the number above.

51 seconds.

Ok you know something.

Now what?

Are you any wiser? Do you know any better what to do next? Any brilliant insights?

No.

It is likely that the Average Time on Site number for your website has been essentially unchanged for a year (and yet, yes sirrie bob, it is still on your "Global Senior Website Management Health Dashboard"!).

Averages have an astonishing capacity to give you "average" data, they have a great capacity to lie, and they hinder decision making. [You are going to disagree; quite ok, please share feedback via comments.]

What can you do?

I have two recommendations for you to consider.

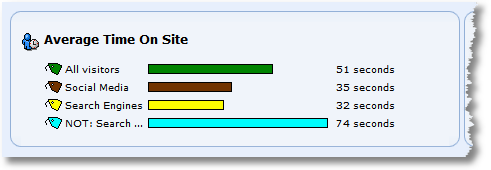

Uno. Segment the data.

Identify your most important / interesting segments for your business and report those along with the Overall averages.

You have more context. Social Media boo! Paid Search booer! Organic yea! Email yea! Etc Etc Etc. : )

While this is not the most optimal outcome, it will at the very minimum give your Decision Makers context within which to ask questions, to think more clearly (and mostly wonderfully ignore the overall average number).

So on your dashboards and email reports make sure that the Key Performance Indicators that use Averages as the measurement technique are shown segmented. It will prod questions. A good thing, as Martha would say.
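To make the segmenting advice concrete, here is a minimal Python sketch that reports an overall average next to per-segment averages. The traffic sources mirror the ones named above, but the visits and durations are invented for illustration, and `segmented_averages` is a hypothetical helper, not a feature of any analytics tool.

```python
from collections import defaultdict
from statistics import mean

# Invented visits: (traffic source, seconds on site). Illustration only.
visits = [
    ("Organic", 180), ("Organic", 240),
    ("Email", 300), ("Email", 360),
    ("Paid Search", 5), ("Paid Search", 10),
    ("Social Media", 3), ("Social Media", 8),
]

def segmented_averages(rows):
    """Return the overall average followed by one average per segment."""
    by_segment = defaultdict(list)
    for segment, seconds in rows:
        by_segment[segment].append(seconds)
    report = {"Overall": mean(seconds for _, seconds in rows)}
    for segment in sorted(by_segment):
        report[segment] = mean(by_segment[segment])
    return report

for name, avg in segmented_averages(visits).items():
    print(f"{name:>12}: {avg:6.1f} s")
```

With this made-up data the overall figure (about 138 seconds) describes none of the four segments; the segmented rows are what prompt the questions.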

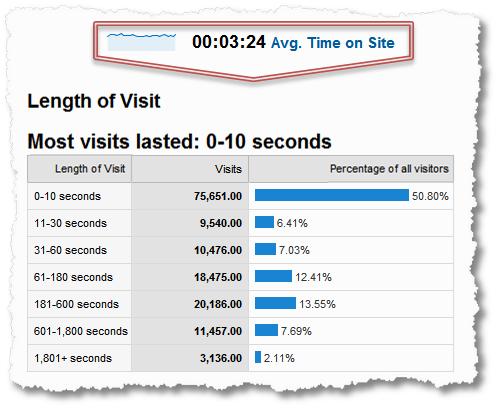

Dos. Distributions baby, distributions!

If averages often (*not always*) stink then distributions rock.

They are a wonderful way to dissect what makes up the average and look at the numbers in a much more manageable way.

Here's how I like looking at time on site. . . .

So delightful.

I can understand the short visits (most!) and decide what to do (ignore 'em, focus hard core, etc).

I can see there is deep loyalty, about 30%. I can figure out what these people like, what they don't like, where they come from, and what else I can do. [Would you have imagined from the Average Time on Site that you have fanatics on your site who are spending more than 10% on each visit!!]

I can try to take care of the midriff; what is up with that anyway?
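A minimal sketch of the distribution view in Python: bucket each visit by length and report counts and shares instead of a single average. The bucket edges and the visit lengths are assumptions chosen for illustration, not the buckets any particular tool uses.

```python
from collections import Counter
from statistics import mean

# Hypothetical half-open buckets [low, high) in seconds; pick edges that suit your site.
BUCKETS = [(0, 10, "0-9 s"), (10, 60, "10-59 s"), (60, 180, "60-179 s"),
           (180, 600, "180-599 s"), (600, None, "600+ s")]

def bucket_label(seconds):
    """Return the label of the bucket that contains this visit length."""
    for low, high, label in BUCKETS:
        if seconds >= low and (high is None or seconds < high):
            return label
    raise ValueError(f"negative duration: {seconds}")

def time_on_site_distribution(durations):
    """Count visits per bucket and express each count as a share of all visits."""
    counts = Counter(bucket_label(s) for s in durations)
    total = len(durations)
    return [(label, counts[label], counts[label] / total) for _, _, label in BUCKETS]

# Invented visit lengths in seconds.
durations = [2, 4, 5, 7, 9, 30, 45, 900, 1200, 1500]
print(f"Average time on site: {mean(durations):.1f} s")
for label, count, share in time_on_site_distribution(durations):
    print(f"{label:>10}: {count:2d} visits ({share:.0%})")
```

Here the average lands at roughly 370 seconds, inside a bucket that contains no visits at all, while the distribution shows a pile of very short visits and a small group of very long ones.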

See what I mean? The difference between the two: Reporting Squirrel vs. Analysis Ninja!

Nothing, really nothing, is perhaps more ubiquitous in our world of Web Analytics than percentages.

You can't take a step without bumping into one.

Some percentages are ok, but very very rarely are they good at answering the "so what" or the "now what" questions.

The problem with percentages is that they gloss over what's really important and also tend to oversell or under sell the opportunity.

Let's compare two pictures. In the first one we just report conversion rates; see what you can understand in terms of insights from this one. . . . .

Now try to answer the question: So What?

Any answers?

Yes some conversions are lower and others are higher? Anything else? Nope?

Ok try this one. . . .

Better right?

You get context. The raw numbers give you key context around performance.

[Update: I use this plugin to get raw conversion rate numbers into Google Analytics: Better Google Analytics Firefox Extension. I highly recommend it, you get the above and a bunch more really cool stuff. Must have for GA users.]

Also notice another thing, I'll touch on this in a bit as well. If you only report overall conversion rate (as we all do in our dashboards) your use of a percentage KPI is much worse. You get nothing.

By showing the various "segments" of conversions I am actually telling the story much better to the Sr. Management. What's working, what needs work.

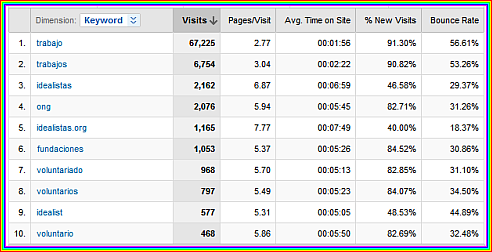

Here's another constant problem with conversion rates. . . .

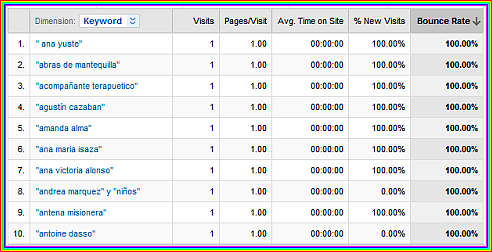

I am looking at a table of data (in any tool really) and it looks like there's something here.

Ok well I want to fix things. I want to know where I can improve bounce rates, so I sort. . . .

Data yes. Totally useless. I can't possibly waste my time with things that bring one visit.

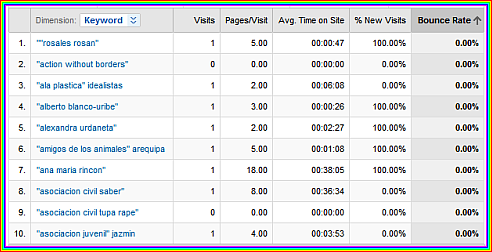

So I re-sort to see if I can find where it's totally working for me. . . .

Strike three, again not very useful, just take a peek at the Visits column.

What I really want is not where the percents are high or low. I want to take action.

What I really really want is some way of identifying statistically significant data, where bounce rates are "meaningfully up" or "meaningfully down" so that I can take action confidently.
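One common way to get at "meaningfully up" or "meaningfully down" is a two-proportion z-test comparing a page's bounce rate with the rest of the site. The sketch below swaps in that textbook test as an assumption; it is not how any particular tool does it, the page counts are invented, and the normal approximation it relies on is itself unreliable for tiny samples such as a single visit, which is part of the point.

```python
import math

def two_proportion_z_test(bounces_a, visits_a, bounces_b, visits_b):
    """Two-sided z-test for a difference between two proportions (pooled standard error).

    Returns (z, p_value). Assumes both visit counts are positive.
    """
    p_a = bounces_a / visits_a
    p_b = bounces_b / visits_b
    pooled = (bounces_a + bounces_b) / (visits_a + visits_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if se == 0:  # every visit bounced, or none did: nothing to distinguish
        return 0.0, 1.0
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Invented numbers: the rest of the site bounces 4,000 of 10,000 visits (40%).
site = (4_000, 10_000)
for page, bounces, visits in [("/one-visit-wonder", 1, 1), ("/landing-b", 300, 500)]:
    z, p = two_proportion_z_test(bounces, visits, *site)
    verdict = "meaningfully different" if p < 0.05 else "noise, for now"
    print(f"{page}: bounce rate {bounces / visits:.0%}, z = {z:.2f}, p = {p:.3g} -> {verdict}")
```

The 100% bounce rate on one visit is not distinguishable from the site's 40%, while the 60% page with 500 visits is; sorting by the raw percentage would have ranked them the other way round.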

I can't do that in Google Analytics. Quite sad.

Some other tools, like Coremetrics (by default) and WebTrends (in some places by default, or with an external "plugin" you can buy from consultants), will compute a %delta (the percentage difference between two numbers) and color it red or green.

That's not what I am talking about.

That is equally useless, because the percentage difference makes you take action even when there is no significant difference between the two numbers. Don't fall for that.

It is truly a crying shame that Google Analytics does not have something like what Google Website Optimizer has. . . .

. . . . a trigger for me to know when results are statistically significant, and by how much I should jump for joy or how many hairs I need to pull out of my head in frustration. See those sweet colored bars in the middle? See the second column after that? Minorly orgasmic, right?

Isn't it amazing that after 15 years web analytics tools are still not smart, even though they have so much data and computations. Ironic if you think about it.

What can you do?

I have three recommendations for you to consider.

Uno. Segment the data.

Wait, did I not say that already? : )

Do it.

Useless. . . .

Useful. . . .

Show opportunities, show failures, let the questions come.

Dos. Always show raw numbers.

Often conversion rates mask the opportunity available.

Conversion rate from Live is 15% and conversion rates for Yahoo! are 3%.

Misleading.

We all know that Yahoo! has significantly more inventory than Live and even if you had all the money in the world you can't make use of that 15% conversion rate from Live.

Show raw Visits. It will look something like this:

See what difference that would make on a dashboard? No false alarms.

You overcome the limitation of just showing the percentage.
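A minimal sketch of pairing a percentage with its raw numbers. Only the 15% and 3% conversion rates come from the example above; the visit counts are hypothetical stand-ins for "Yahoo! has significantly more inventory than Live".

```python
# Conversion rates from the text; visit counts are hypothetical.
sources = {"Live": (200, 0.15), "Yahoo!": (20_000, 0.03)}

def with_raw_numbers(rows):
    """Return (source, conversion rate, visits, conversions) for each source."""
    return [(name, rate, visits, round(visits * rate))
            for name, (visits, rate) in rows.items()]

for name, rate, visits, conversions in with_raw_numbers(sources):
    print(f"{name:<7} {rate:>4.0%} of {visits:>6,} visits = {conversions:>4,} conversions")
```

With these assumed volumes the "worse" source delivers twenty times as many conversions (600 vs. 30), which is exactly the context the bare percentage hides.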

In the example above I am using Visits because I want to show the HiPPOs where the constraints are (without them having to think, thus earning my Ninja credentials!). But I am most fond of pairing raw numbers with Outcomes (Orders, Average Order Value, Distribution of Time, Task Completion Rates, etc. etc.) because HiPPOs love Outcomes.

Tres. Don't use % delta! Use Statistical Significance et al.

When you use percentages it is often very hard to discern what is important, what is attention worthy, what is noise and what is completely insignificant.

Be very aware of it and use sophisticated analysis to identify for your Sr. Management (and yourself!) what is worthy.

Use Statistical Significance, it truly is your BFF!

Use Statistical Control Limits, they help you identify when you should jump and when you should stay still (so vital!).
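Statistical control limits can be sketched with a p-chart, a standard control chart for proportions: the center line is the pooled conversion rate, and each day's limits sit three standard errors either side of it. This is a simplified sketch under stated assumptions: the daily numbers are invented, and it computes the center line from the same days it checks, whereas in practice you would usually fix the limits from a stable baseline period.

```python
import math

def p_chart(days, sigmas=3.0):
    """Flag days whose conversion rate falls outside p-chart control limits.

    days: list of (label, visits, conversions) tuples.
    Returns (center line, [(label, rate, lower, upper, out_of_control), ...]).
    """
    total_visits = sum(visits for _, visits, _ in days)
    total_conversions = sum(conv for _, _, conv in days)
    p_bar = total_conversions / total_visits
    rows = []
    for label, visits, conv in days:
        sigma = math.sqrt(p_bar * (1 - p_bar) / visits)
        lower = max(0.0, p_bar - sigmas * sigma)
        upper = min(1.0, p_bar + sigmas * sigma)
        rate = conv / visits
        rows.append((label, rate, lower, upper, not lower <= rate <= upper))
    return p_bar, rows

# Invented daily data: (day, visits, conversions).
days = [("Mon", 1000, 20), ("Tue", 1000, 22), ("Wed", 1000, 19),
        ("Thu", 1000, 21), ("Fri", 1000, 45)]
p_bar, rows = p_chart(days)
print(f"Center line: {p_bar:.2%}")
for label, rate, lower, upper, flag in rows:
    print(f"{label}: {rate:.1%} (limits {lower:.1%} to {upper:.1%}){'  <- jump!' if flag else ''}")
```

Days inside the limits are the "stay still" days; only Friday in this made-up series is a "jump" day.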

This is all truly sexy cool fun, trust me.

Can I be honest with you?

[Ok so I can hear your sarcastic voice saying: "Why stop now?" ;)]

Ratios have an incredible capacity to make you look silly (or even "dumb").

I say that with love.

What's a ratio?

"The relative magnitudes of two quantities (usually expressed as a quotient)." (Wordnetweb, Princeton.)

That was easy. : )

In real life you have seen ratios expressed as 1.4 or as 4:2 or other such variations.

You are comparing two numbers with the desire to provide insights.

So let's say the ratio between new and returning visitors. Or the ratio of friend requests sent on Facebook to friend requests received. Or the ratio of articles submitted on a tech support website to articles read. Or… make your own.

They abound in our life. But they come with challenges.

The first challenge to be careful of is that the two underlying numbers could shift dramatically without any impact on your ratio (then you my friend are in a, shall we say, pickle). . . .

I have put my "brilliant" Excel skills to work to demonstrate that point. In your dashboard you'll show the ratio (all "green" for four months). Yet the fundamentals, which are really what your Sr. Management is trying to get at, have changed dramatically, perhaps enough to warrant an investigation, and they'll get overlooked.
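A minimal Python sketch of the same effect, using the new-to-returning visitor ratio mentioned earlier with invented monthly counts: the ratio never moves, while total traffic swings wildly.

```python
# Invented monthly counts: (month, new visitors, returning visitors).
months = [("Jan", 6_000, 4_000), ("Feb", 3_000, 2_000),
          ("Mar", 12_000, 8_000), ("Apr", 1_500, 1_000)]

def ratio_with_context(rows):
    """Return (month, new/returning ratio, new, returning, change in total vs. prior month)."""
    report = []
    prev_total = None
    for month, new, returning in rows:
        total = new + returning
        change = None if prev_total is None else (total - prev_total) / prev_total
        report.append((month, new / returning, new, returning, change))
        prev_total = total
    return report

for month, ratio, new, returning, change in ratio_with_context(months):
    change_text = "n/a" if change is None else f"{change:+.1%}"
    print(f"{month}: ratio {ratio:.2f} | new {new:>6,} | returning {returning:>6,} | total {change_text}")
```

A dashboard showing only the 1.50 column stays "green" every month; the raw columns show traffic halving, quadrupling, then collapsing.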

I hear you protesting all the way from Spain, "aw come on, you have got to be kidding me!". I kid you not.

Think of all the effort you have put into automating the dashboard and cramming all the data into it. Ahh… you've stuffed it with percentages and ratios to make it fit. And you've automated it to boot.

Casualty? Insights. Actionability.

The second problem with ratios is a nuance on the above. It is perhaps more insidious. It occurs when you compare two campaigns or sources or people or other such uniquely valuable things.

I see it manifested by a HiPPO / Consultant / Vendor Serviceman foisting upon you that 1.2 is a "good ratio".

Then you start measuring and people start gaming the system. Because, you see, 12/10 gets you that ratio, as does 12,000/10,000. Yet both get "rated" the same, and that, as you'll agree, is dumb.

What can you do?

I have two recommendations for you to consider.

Uno. Resist just showing the ratio.

Throw in a raw number, throw in some other type of context, and you are on your way to sharing something that will highlight an important facet and prod good questions.

Enough said.

Dos. Resist the temptation to set "golden" rules of thumb.

This is very hard to pull off, we all want to take the easy way out.

But setting such rules will incent the wrong behavior and hinder any thought about what's actually good or bad.

You can use a ratio as a KPI, but incent the underlying thing of value: for example, Reach, and not the ratio of Visits to Subscribers (!!).

Compound metrics produce an unrecognizable paste after mixing a bunch of, perhaps perfectly good, things.

All kidding aside compound metrics are all around us. Most Government data tends to be compound metrics (is it a wonder that we understand nothing that the government does?).

A compound metric is a metric whose sub components are other metrics (or it is defined in terms of other computations).

Here's an example:

(% of New Visits) times (Average Page Views per Visit) equals, making something up here, Visit Depth Index.

What?

Yes what indeed.

The environments where compound metrics thrive are ones where things are really really hard to measure (so we react by adding and multiplying lots of things) or when confidence in our ability to drive action overtakes reality.

Honestly no matter what the outcome is here (or how much of a "god's gift to humanity" it is) how can you possibly do anything with this:

Website Awesomeness= (RT*G)+(T/Q)+((z^x)-(a/k)*100)

(If you don't know what those letters stand for, just make something up.)

Compound metrics might be important (after all, the Government uses them), but they have two corrosive problems:

1) When you spit a number out, say 9 or 58 or 1346, no one except you has any idea what it means (so there is a huge anti-actionability bias), and worse,

2) You have no way of knowing if it is good or bad or if you should do something. You can easily see how a rise in some numbers and a fall in others could cause nothing to happen. Or all hell could break loose and yet you still get 9. Or 58. Or 1346.
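Using the made-up Visit Depth Index from earlier, a short Python sketch of the second problem: two very different situations (the inputs are invented) produce exactly the same compound number.

```python
def visit_depth_index(pct_new_visits, avg_page_views):
    """The made-up compound metric from the post: (% of New Visits) x (Average Page Views per Visit)."""
    return pct_new_visits * avg_page_views

# Invented inputs: (% of new visits, average page views per visit).
scenarios = {
    "Mostly new visitors, shallow visits": (80, 2.0),
    "Mostly returning visitors, deep visits": (20, 8.0),
}

for name, (pct_new, page_views) in scenarios.items():
    print(f"{name}: Visit Depth Index = {visit_depth_index(pct_new, page_views):.0f}")
```

Same 160, opposite stories; only the components tell you which one you are in.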

What can you do?

I have three recommendations for you to consider.

Uno. Take them with a grain of salt (or a truck full of salt).

Really.

Regardless of whether it comes from me or President Obama or [insert the name of your favorite religious deity here].

Stress test how you'll overcome the two challenges above. If your compound metric passes those tests you are all set.

Dos. Degrade to key "critical few" components.

Grinding RT and G and T and Q and z and x and a and k into a mush is the problem. Not RT or G or T or Q or z or x or a or k themselves.

Spend some time with your HiPPOs and Marketers and the people who pay your salary. Try to understand what the business is really trying to solve for. Put your nose to the grindstone and do some hard work.

At the end of this process, as you decompose the individual components, what you'll realize is that all you need is RT and Q and G. Report them.

No not as a weird married "couple". As individuals.

Everyone will know what you are doing, and you help the business and your dashboard focus and drive action.

Tres. Revisit and revalidate.

If you must use compound metrics, please revisit them from time to time to see if they are adding value. Also check that they are adding value in all the applicable scenarios.

If you are using weights, as many compound metrics tend to do, then please please stress test to ensure the weights are relevant to you. Also revalidate the weights over time to ensure you don't have to compensate for seasonality or other important business nuances.
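A quick stress test for weights, as a Python sketch: score the same components under two plausible weightings and check whether the conclusion survives. The pages, component names, scores (already normalized to 0-1) and weights are all hypothetical.

```python
# Invented component scores, already normalized to the 0-1 range.
pages = {
    "Page A": {"engagement": 0.9, "conversion": 0.2},
    "Page B": {"engagement": 0.4, "conversion": 0.6},
}

def weighted_score(components, weights):
    """Weighted sum of component scores; weights must cover every component."""
    return sum(weights[name] * value for name, value in components.items())

def ranking(weights):
    """Page names ordered from highest to lowest weighted score."""
    return sorted(pages, key=lambda page: weighted_score(pages[page], weights), reverse=True)

for weights in ({"engagement": 0.7, "conversion": 0.3},
                {"engagement": 0.3, "conversion": 0.7}):
    print(weights, "->", ranking(weights))
```

If a different but equally defensible weighting flips the ranking, as it does here, the compound metric is telling you more about the weights than about the pages.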

End of story.

I'll close with this: there are two schools of thought about Analytics.

One is that math is easy so let's go add, subtract, multiply and divide because calculators, computers and data are easily available.

This is the "Reporting Squirrel" mental model, data above all else.

The other is that your entire existence is geared towards driving action. So think, stress test, be smart about the math you do. Computers and calculators are cheap, but that does not excuse doing the things outlined above.

This is the "Analysis Ninja" mental model, insights above all else.

Good luck!

Ok, now it's your turn. What do You think of these four measurement techniques? Agree with my point of view? Why? Why not? Care to share your own bruises from the wonderful world of Web Analytics Key Performance Indicators? Got questions?

Please share your feedback. Thank you.

PS:

A couple of other related posts you might find interesting:


Do you have a sixth sense?

We're currently reviewing all our KPIs and dashboards so will be making good use of your priceless article!

Would one effective use of percentages not be when looking at 'percentage change over time'?

eg: top X fast moving internal search terms [week on week by % change]

You've given this post an excellent angle, honestly… really good work!

I find Analytics more and more interesting as a tool, but it's hard to learn to interpret the information it provides at a deeper level.

I'm using it on Blogger now, and I created a short tutorial explaining how to install it. I hope it's useful for your visitors:

http://www.lawebdejuan.com.ar/2009/02/google-analytics-en-blog-de-blogger.html

Cheers! Keep up the good work.

Statistical Significance! I love that too! However, I can't seem to find any tool providing that type of functionality. In SiteCatalyst there is a nice alert function, but I have to set the threshold manually just by looking at the average level of the KPI (oh, did I say average?). Wouldn't it be nice if these web analytics vendors built at least the simplest statistical significance calculations into their tools?

Thank you, Avinash, for another wonderful, thoughtful, delightful, beautiful, …ful post! (I am running out of my vocabulary :) )

Ed

Avinash,

What a wonderful post! Very relevant to my work in the custom survey research world. I particularly like your discussion of statistical significance. My particular pet peeve is the word "directionally". Basically means there's no significant difference between two numbers, but it looks good for the client. I find that if we focus our analysis on truly significant differences (both statistical AND meaningful), we get a much more focused – and actionable – story for our clients.

Thank you!

Mike

Hi Avinash: some comments I have today:

1. I agree with the unfortunate fact that Google Analytics reports are not more like GWO in terms of showing statistical significance, when sorting columns and things like that. There should be a minimum # of (X, Y, or Z metric) to activate certain percentages or ratios. When I think of this, I think of how sports statistics work – for example, if a new Quarterback comes in and throws one pass (which is complete), he has a 100% Completion Percentage. He should lead the NFL in completion percentage, right? Nope – there is a minimum # of completions a QB must have (which increases every week) in order to be counted officially, statistically. Google Analytics should employ similar strategies here.
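The minimum-qualifier idea is easy to apply yourself on exported data. A minimal Python sketch, with invented page data and an arbitrary, hypothetical `min_visits` cutoff of 100: like the NFL rule, it only screens out the tiniest samples and is a cruder filter than a real significance test.

```python
def rank_by_bounce_rate(rows, min_visits=100):
    """Sort pages by bounce rate (highest first), skipping pages below min_visits.

    rows: list of (page, visits, bounces) tuples.
    """
    eligible = [(page, visits, bounces / visits)
                for page, visits, bounces in rows if visits >= min_visits]
    return sorted(eligible, key=lambda row: row[2], reverse=True)

# Invented report rows: (page, visits, bounces).
rows = [("/a", 1, 1), ("/b", 2, 0), ("/c", 1_200, 780),
        ("/d", 5_400, 1_890), ("/e", 300, 210)]
for page, visits, rate in rank_by_bounce_rate(rows):
    print(f"{page}: {rate:.0%} bounce rate on {visits:,} visits")
```

The one-visit "100%" page no longer tops the list; the pages that remain have enough traffic to be worth a look.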

2. For Goal Conversion percentages, I like to use the Goal Verification report in GA, because I often combine two or more Goal URLs into 1 Goal slot. I like how the report breaks down each URL, and to the right of the bar graphs, shows the percentage AND the number of Goals matched, which I really like (and also hope GA can incorporate that into more reports).

3. Compound Metrics – I also am not the world's biggest fan of these, but I do think that the world is big enough for them, and I think they do have their place. Again I'll use a football statistic example, the Quarterback Rating (where 158.3 is "perfect"). I hate how this formula is calculated and structured, yet every NFL analyst / commentator / announcer brings up how great quarterback X or Y is because their QB RTG is 95 or 98 or 102. I would say QB rating is a compound metric, as it smashes together metrics and other historical average figures, but until someone decides to come up with something better, QB rating is the best we have as far as some kind of ranking / rating / index system for evaluating who the best QB is / are (does this train of thought make sense?)

Thanks again!

Compound metrics have caused more pain for me than they have ever caused pleasure.

If you are very, very good at what you do, and you want to create a compound metric for yourself to assist in monitoring your work, fine. But don't show it to anybody else.

When a compound metric changes, you have to deconstruct the components to discover why it changed. Wouldn't it be faster / simpler / better to just track the components?

Great post. When it comes to web analytics, having actionability should always be the bottom line. Too many marketers I work with like statistics that are "nice to know", but you can't do anything with them.

Instead of spending a huge amount of time looking busy and producing numbers that don't help, it seems better to look at isolated areas of the website that need to be improved. I think you said "context is king" in a post a while ago, and this post just reinforces it even more with different common KPIs people use.

Thanks again Avinash. Thanks for mentioning my question in the last post, I hope it helped someone else as much as me.

Hi Avinash, I have to say that nothing could stop me from running around the company solving things except a blog post from you.

Just a hint about not being able to sort data in Google Analytics by bounce rate: actually you can do it, and in quite a relevant way. :)

I usually select the first 25 or 50 entries in a report according to a metric that matters to me: let's say the top 25 landing pages by number of visits. Then I sort only this list by bounce rate using the following Greasemonkey script: http://andrescholten.nl/index.php/google-analytics-tabellen-sorteren-verbeterd/

I like it more sorted like that :)

If you need someone to help you launch a raid on Google, lock the Google Analytics team and Google Website Optimizer team in a room, and make them play nice together, count me in :) I would love to see more cross-functionality, not just in using statistical models in GA but in seeing complex results of my GWO tests in my analytics dashboards (including segmentation, etc.).

There are so many insights that I feel like I'm just a step away from right now because of lack of communication between the two tools, and it's extremely frustrating.

Avinash, this is a great post on some of the pitfalls one has to be aware of when stepping into the Quant/Analytical world. Got to totally agree with you on Averages, Percentages, and Ratios. If used wisely, Averages 'can' be used to identify spikes (as in control charts) but you are absolutely right that "the average [of] behavior on my site does not help me to target either the leaders or the laggards". You are spot on about Percentages & Ratios too: they don't drive the bottom line (2/3 and 200/300 may come out the same from a pct/ratio point of view, but in the latter case I had 297 more inputs and 198 more conversions; as you say, $$ baby :-) ).

On the compound metric, I agree with you that metrics of various kinds should not be put through a processor: you will definitely get a purée, but you will spend more time trying to make it 'palatable', time better spent elsewhere. However, I think different metrics can be weighted differently (based on the role each plays in your business) and maybe, just maybe, you can create an index for each of the weighted segments. But then again, if you have the individual metrics, why not just look at them individually :-)

Avinash,

Great post, as ever. Just to add a "me, too" to your thoughts about segmentation, may I propose this point as The First Law of Analytics:

"If you don't have segments, you don't have data."

Too many places seem to think they "know" something because they have a number (conversion, visits, whatever) attached to it. In reality, if you don't know what lever to pull to change that number, you don't know anything useful.

Keep up the great work.

I agree with you completely, and follow your advice as consistently as I can (I've segmented my GA account into bits, and I love the advanced segments feature); however, my day-to-day existence as an analyst goes something like this:

CEO to Company: This year develop insights!

Me: Ok, thanks to AK I've got a good idea how to do this…

Me to Internal Ad team: If I send you GA tags for our ad URLs, will you be able to implement them?

Ad team to me: Why?

Me: So we can get clickstream data, and do all orders of wonderful analysis.

Ad Team: You mean tracking clicks?… we already do that, last send we got 4xxxx clicks.

Me: uhhhh… yeah. I see.

I think the problem I encounter is that I won't know what I'm going to get from analysis, in terms of insights, until I actually gather the data and do the analysis, but a lot of times it looks like I'm going to need results first.

Small wins I suppose, or just the realities of building a data driven culture from the runway up.

Thanks for the insight Avinash, good stuff as always.

And you're right those sweet colored bars in the optimizer are fantastic!

In addition to @Claudiu: check this Firefox extension from Erik Vold:

http://www.vkistudios.com/tools/firefox/betterga/

It contains a few cool Greasemonkey scripts that enhance Google Analytics. And it works without Greasemonkey. (It also contains my column sorter).

Michael: Like you I have also come to "adore" the word directionally! :)

In all seriousness though, I think that word (and the mental model) is the legacy of a world where we did not have enough data and did not know how to do any better. While the world is not perfect, increasingly that is not the case: we have lots of data, and we have lots of different things we can measure.

Directionally should be our last choice, if at all.

Joe: On compound metrics I think there is one big difference between using it in your job and mine, when compared to the Quarterback Rating. We have no idea what they are doing to come up with 95 or 108 for the quarterback rating. While not optimal in that use case it is ok because:

1) they are not trying to get a vast majority of the people to understand the metric (it's more like: "just trust us, we are smart and we know what we are doing here") and

2) they are not trying to get people to make valuable business decisions based on that metric (perhaps at QB trading time, and even then maybe a handful of people).

You and I, on the other hand, don't have that privilege. We have to convince an entire organization to take our data, 95 or 108, understand it, apply their business acumen and marketing knowledge, make a decision and go take action. None of this is possible if you are the only one who understands the metric (and even then my "test" in the post still needs to be passed).

No?

Claudiu: I know of Andre's extension, it is wonderful. But having analyzed tons of sites I have become a huge fan of the long tail (be it for keywords or content or whatever else). And in a world dominated by the long tail phenomenon the top 20 or 50 are simply insufficient to identify valuable insights.

We need web analytics tools that are smarter than what's in the market today.

That said what Andre has built is wonderful and I strongly encourage everyone to implement it! Better than what is available by default. :)

Ed: Web Analytics vendors, all of them, have been focused on just getting data out. A legacy of how the industry was born: "Oh, here are some log files. Goodness, there is some data in it. Let's make tables and graphs from what's in there."

Of course it is a crime of a higher order if you are paying large sums for a web analytics tool and it is only smart at collecting data, but "dumb" at using well-established mathematical intelligence models!

But I am confident that we (free or paid tools) are headed toward competing on providing intelligence, instead of racing to the bottom on who can collect the most data.

Julian: Using % change over time is a good way to understand the trend for one metric. If you completely remove the absolute number for that metric (something I have advocated against in this post!) then you get the added benefit of people not obsessing about the raw number. In selected cases that can be such a blessing!

Ned: Love how you ended your comment! : )

-Avinash.

Great post, Avinash! I am trying to set up some advanced segments in Analytics to filter bounce rates so they only show when visits are over a certain level (in essence to solve the problem you mention above), but I am struggling.

Can you offer any assistance to an Analytics wannabe?

Excellent points. Unfortunately, I am also guilty of often taking the easy way out and using all of the above KPIs. One caveat: I always try to trend my data for reference. At least this way I know if my "KPI" is moving in the correct direction (correct being subjective).

just a thought

aseem

Aseem,

I find sometimes that with niche sites, the main problem with using more advanced KPIs is that there isn't enough data to make it feel concrete.

I think using those 4 measurement techniques are simply part of the journey for those new to analytics.

Avinash perhaps you at some early stage in your career also thought you could make a big change using exactly those? I did and it comes with experience and knowledge to be able to realise your mistakes.

Articles like this are fantastic in assisting with the knowledge building for ninja-apprentices but sometimes experience is required to understand why things are 'recommended' to be done in a certain way… 5 years ago I just wouldn't have understood this so well, without having made those same mistakes myself!

Thanks for sharing these techniques, and for spending your time writing this helpful post.

This post is further proof that without segmentation we don't have anything. Custom averages and ratios become so sexy once you apply segmentation to your site.

I don't want to stray from the topic, but please let me ask: would you consider writing a post, with your usual great insights, about Advanced Segmentation and Filters? When should we use each one? How far can we go with Advanced Segmentation without using Filters?

Oh, one last question: did you check your title for this topic? It is "Four Not Useful…". Shouldn't you remove this "Not"? :)

Thanks

Dio.

Thanks, Avinash, for another great post. Loved reading it.

I agree that segmentation makes a lot of metrics meaningful. I always check whether a %delta is significant, but it is nevertheless a great way to see whether something may change or is changing.

I never use ratios, and in your example it looks like a conversion percentage, which on its own is not an actionable metric.

Just one thing, Avinash: is there really no compound metric in the world that just says SUCKS or GOOD for the whole website? I just love to think there is, because the quest to find it is great fun!

I humbly second the "request" for an article on advanced segmentation! Also do you think that an increase in keyword scope (the number of traffic generating keywords) can be considered a useful KPI? Or is it meaningless without taking bounce rates etc. into account? It is one I use a lot when reporting to my clients but I wonder if in fact it is useful at all!

Thanks for the good insight, Avinash. I particularly like your discussion of statistical significance. Great stuff, and I will surely keep tracking your future updates. Thanks for the effort.

Fantastic post!

"Average" is misleading for the most part because it is used to describe data that are not bell-shaped. This is where segmentation becomes key: as you segment, the data should become more normal, more bell-shaped, because the population self-selected by keyword, campaign, etc…. You can use median to describe asymmetrical data since calculating the median takes the effect that extreme observations have on the mean out of the mix.
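A tiny Python illustration of the mean-versus-median point, with invented order values: a single extreme order drags the mean far from where most orders sit, while the median barely notices it.

```python
from statistics import mean, median

# Invented order values: eight ordinary orders and one very large one.
order_values = [20, 22, 25, 25, 28, 30, 35, 40, 1500]
print(f"mean:   {mean(order_values):.2f}")
print(f"median: {median(order_values)}")
```

The mean comes out around 191.67, larger than every ordinary order, while the median is 28.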

Any analyst who doesn't use Excel's Data Analysis add-in isn't really doing analysis. In terms of identifying notable (significance is a whole other matter) values, here's a (relatively) quick way:

1. Select the series

2. Name the series, e.g. "orders"

3. Calculate the mean

=average(orders)

4. Calculate the standard deviation

=stdev(orders)

5. Determine the skewness of the data (the degree of asymmetry)

=skew(orders)

6. Calculate kurtosis (how peaked or flat the distribution is compared to normal)

=kurt(orders)

7. Calculate a "normal" flag (1 if both the absolute skewness and the absolute kurtosis are at most 1)

=if(and(abs(skew(orders))<=1,abs(kurt(orders))<=1),1,0)

8. Find quartiles 1, 2, and 3

=quartile(orders,1)

=quartile(orders,2)

=quartile(orders,3)

9. Calculate the interquartile range (IQR)

=quartile(orders,3)-quartile(orders,1)

10. Calculate the "step"

=1.5 * IQR

If the data set is near bell-shaped, you can use the standard deviation to determine outliers, otherwise use Tukey's Rule.

11. Find the threshold for lower outliers (using names for cells rather than references)

=if(normal=1,mean-3*stdev(orders),quartile(orders,1)-step)

12. Find the threshold for higher outliers

=if(normal=1,mean+3*stdev(orders),quartile(orders,3)+step)
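For anyone who wants the same steps outside Excel, here is a minimal Python translation, offered as a sketch rather than a reference implementation. Assumptions: a plain list of numbers stands in for the named range `orders`; the helper names `excel_skew`, `excel_kurt` and `outlier_thresholds` are hypothetical; the skew and kurtosis helpers reimplement the formulas Microsoft documents for SKEW and KURT; and `statistics.quantiles(..., method="inclusive")` (Python 3.8+) is used because it interpolates the way Excel's QUARTILE does.

```python
import statistics


def excel_skew(xs):
    """Sample skewness using the formula Excel documents for SKEW (n >= 3)."""
    n = len(xs)
    m = statistics.mean(xs)
    s = statistics.stdev(xs)  # sample standard deviation, like STDEV
    return n / ((n - 1) * (n - 2)) * sum(((x - m) / s) ** 3 for x in xs)


def excel_kurt(xs):
    """Excess kurtosis using the formula Excel documents for KURT (n >= 4)."""
    n = len(xs)
    m = statistics.mean(xs)
    s = statistics.stdev(xs)
    a = n * (n + 1) / ((n - 1) * (n - 2) * (n - 3))
    b = 3 * (n - 1) ** 2 / ((n - 2) * (n - 3))
    return a * sum(((x - m) / s) ** 4 for x in xs) - b


def outlier_thresholds(orders):
    """Return (lower, upper) outlier thresholds following steps 3 to 12."""
    mean = statistics.mean(orders)
    stdev = statistics.stdev(orders)
    # Step 7: "normal" flag when |skew| <= 1 and |kurtosis| <= 1
    normal = abs(excel_skew(orders)) <= 1 and abs(excel_kurt(orders)) <= 1
    # Steps 8-10: quartiles, IQR and the 1.5 * IQR "step"
    q1, _q2, q3 = statistics.quantiles(orders, n=4, method="inclusive")
    step = 1.5 * (q3 - q1)
    # Steps 11-12: mean +/- 3 standard deviations, otherwise Tukey's fences
    if normal:
        return mean - 3 * stdev, mean + 3 * stdev
    return q1 - step, q3 + step


# Hypothetical daily order counts with one unusual day
orders = [10, 12, 11, 13, 12, 14, 11, 40]
low, high = outlier_thresholds(orders)
outliers = [x for x in orders if x < low or x > high]
print(low, high, outliers)  # 7.625 16.625 [40]
```

With this sample the skewness is well above 1, so the sketch falls back to Tukey's fences rather than the three-standard-deviation rule, which is exactly the branch the `if` in steps 11 and 12 is there to choose.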

Now, what complicates things is a series that shows a trend over time. In that case, you cannot use the above descriptive statistics. Moving averages are your friend.
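One way to act on the moving-average advice, sketched in Python under stated assumptions: compare each point with the average of the few points just before it, and look for unusual values in those residuals (for example with the thresholds from the steps above) rather than in the raw, trending series. The window of 3, the weekly numbers and the function name `trailing_baseline` are all hypothetical choices for illustration.

```python
def trailing_baseline(series, window):
    """For each point, the mean of the `window` points before it (None if too few)."""
    return [
        None if i < window else sum(series[i - window:i]) / window
        for i in range(len(series))
    ]


# Hypothetical weekly orders with an upward trend and one spike
weekly_orders = [100, 110, 120, 130, 140, 250, 160]
baseline = trailing_baseline(weekly_orders, window=3)

# Residual = actual minus the recent baseline; the trend mostly drops out
residuals = [
    None if b is None else x - b for x, b in zip(weekly_orders, baseline)
]
print(residuals)
```

The steady trend leaves a small, roughly constant residual, while the spike in week six stands out clearly.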

I'm by no means (pardon the pun) a statistician, not by a long shot; but if we're going to throw numbers around, we had better make sure we know what we're talking about. I highly recommend "Data Analysis in Plain English" by Harvey J. Brightman.

I agree with your point that averages lie, and I have become a big believer after reading your book Web Analytics: An Hour a Day. However, the problem I face is that people in my company are stuck on averages, because unfortunately that is how even the biggest companies operate, i.e. average conversion, average sale, average bounce and so on. I am being pushed to use a fixed average conversion number for the conversion goal and am not sure what to do about this. Any tips?

Richard: When you segment visits it is important to know that each visit (session) is either bounced or not.

The segmentation you'll apply to the visits is hence a binary function, not a "filtering function" such as "50% bounce rate or greater"; with the latter you are trying to filter based on a dimension (keywords, in your example).

Here is how I could go about identifying keywords that have significant traffic and high bounce rates (because something might be wrong there).

1) Create a segment that identifies the "bouncers". . .

2) Go to the keywords report (for paid or organic) and choose the newly created Bounced segment. . . .

3) Check out the keywords, sorted by Visits (traffic), that have high bounced visits. . .

What is perhaps even more helpful is looking at the Bounced segment in context of All Visits.

Check out the % difference in Green in the Visits column. You can quickly see bouncing as a % of total. . .

You can of course apply this methodology to any report (referrers, campaigns etc.), and I recommend you at least apply it to your Paid Search keywords (in the Keywords report in GA just click on "paid") to identify money-saving / ROI-boosting opportunities.
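Outside any particular tool, the Bounced-versus-All-Visits comparison boils down to simple arithmetic: each visit is either bounced or not, so per keyword you count bounced visits, divide by all visits, and keep the raw counts next to the share. A minimal Python sketch; the keywords and counts are hypothetical, and this is not a description of how Google Analytics computes or displays the report internally.

```python
# Hypothetical keyword report: keyword -> (all visits, bounced visits)
keyword_visits = {
    "keyword a": (1200, 780),
    "keyword b": (950, 190),
    "keyword c": (400, 300),
    "keyword d": (35, 5),
}


def bounced_share_by_keyword(report):
    """(keyword, visits, bounced visits, bounced share of visits), most visits first."""
    rows = [
        (kw, visits, bounced, bounced / visits)
        for kw, (visits, bounced) in report.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


for kw, visits, bounced, share in bounced_share_by_keyword(keyword_visits):
    print(f"{kw:<10} visits={visits:>5} bounced={bounced:>4} ({share:.0%})")
```

Sorting by visits first keeps the attention on keywords that carry real traffic, and the raw bounced count shows how many visits are actually at stake.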

Hope this helps,

Avinash.

John: This is a brilliant comment, the detailed steps are super helpful to anyone who will read the post. Thanks so much for taking the time to write this!!

Sameer: It is hard to change cultures; don't try to do it overnight.

My first simple step was to start showing the distributions on the slide (like you see in the post with average time on site). And I had already done some segments of the higher and lower distributions (but they were not in the deck / presentation).

So when I presented the data I could say something like:

"The average is xx and it is yy different from the goal but if you look at the distributions you'll see a different picture. When I drill down to the top dist I found these loser sites / pages and what is most valuable is the bottom dist because it shows what our customers love and what keywords bring those customers."

That starts the process of educating the management, getting change to start, one person / meeting at a time.

These two blog posts might have other ideas that could help:

Seven Steps to Creating a Data Driven Decision Making Culture.

Lack Management Support or Buy-in? Embarrass Them!

Good luck!

-Avinash.

I get Avinash's main point, which I think is to put context to the reporting, but I am not sure it makes sense to talk about learning, statistical significance, or any generalization of data without using averages or percentages. As I understand it, in order to compactly describe a collection of data it is useful to have its average, its spread, and its distribution. In addition, I think that Avinash is arguing that web analytics data is clumpy and that, at least in some dimensions, it can be better described as if the data had been generated by several different processes (the different user segments) rather than by just one. We then have a collection of conditional averages, measures of spread and distributions. But for all of this you need averages; otherwise we suffer from a level of granularity that masks any structure that might be in the data.

For example, take a look at that Google optimizer screenshot. Combination5 has a conversion rate of 16.2%. Now go ask everyone who was exposed to Combination5 and who has a conversion rate of 16.2% to raise their hand. How many hands do you think you will see? None; nobody will have a conversion rate of 16.2%, it will be either 0 or 1 (assuming each person is exposed only once). But you use the average here to generalize over the treatment group: since that group was filled randomly, you assume that the differences between individuals will average out with respect to the other treatment groups. You then find a weighted (by the two variances) difference between the averages of the control and the treatment and perform your hypothesis test. On a side note, I am not too sure that hypothesis testing is really the optimal way to do website optimization.
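A minimal Python sketch of the kind of test described above, assuming an unpooled two-proportion z-test: the difference in conversion rates divided by a standard error built from both groups' variances. This is one common choice; a pooled standard error or an exact test are alternatives. The visit and conversion counts are hypothetical (only the 16.2% rate echoes the comment), and the function name is illustrative.

```python
from statistics import NormalDist


def two_proportion_z_test(conv_a, visits_a, conv_b, visits_b):
    """Unpooled two-proportion z-test: returns (lift, z, two-sided p-value).

    Assumes both observed rates are strictly between 0 and 1,
    otherwise the standard error is zero.
    """
    p_a = conv_a / visits_a
    p_b = conv_b / visits_b
    # Standard error combines the variance of each group's conversion rate
    se = (p_a * (1 - p_a) / visits_a + p_b * (1 - p_b) / visits_b) ** 0.5
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical counts: control at 10.0%, a combination at 16.2%
lift, z, p = two_proportion_z_test(100, 1000, 162, 1000)
print(f"lift={lift:.3f} z={z:.2f} p={p:.5f}")
```

With smaller hypothetical samples the same 6.2-point lift would produce a much smaller z and could easily fail to be significant, which is why the raw visit counts matter alongside the percentages.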

Another example: the distribution of time on site shows how the data is spread, but note that this data appears to be drawn from all users; it represents the distribution of time on site for the average user. Average and useful. What might also be interesting is to see if this distribution looks the same for each segment, so you have several distributions. Of course, following on the push for significance testing, you now have to figure out whether these differences are due to some structure/signal or just to noise. How do we do that? Hmm, it gets a little more tricky; maybe a chi-square goodness-of-fit test, or use KL-divergence (not a metric, of course, but useful).
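Both ideas can be sketched with the Python standard library, assuming time on site has already been bucketed for two segments. The bucket boundaries, the counts and the function names are hypothetical. The chi-square shown is the two-sample (homogeneity) form on a 2 × K table, a close cousin of the goodness-of-fit test mentioned; with 4 buckets it has 3 degrees of freedom, whose 5% critical value is about 7.81. A p-value would need a chi-square distribution function from a statistics library.

```python
import math

# Hypothetical visit counts per time-on-site bucket (0-10s, 11-60s, 61-180s, 181s+)
new_visitors = [500, 200, 200, 100]
returning_visitors = [200, 150, 250, 400]


def chi_square_2xk(counts_a, counts_b):
    """Pearson chi-square statistic for a 2 x K table of bucket counts.

    Degrees of freedom are K - 1. Assumes no bucket is empty in both rows.
    """
    total_a, total_b = sum(counts_a), sum(counts_b)
    grand = total_a + total_b
    stat = 0.0
    for a, b in zip(counts_a, counts_b):
        column = a + b
        for observed, row_total in ((a, total_a), (b, total_b)):
            expected = row_total * column / grand
            stat += (observed - expected) ** 2 / expected
    return stat


def kl_divergence(counts_p, counts_q):
    """KL(P || Q) in nats between two bucketed distributions.

    Asymmetric (so not a metric). Assumes Q has no empty bucket where P is non-empty.
    """
    total_p, total_q = sum(counts_p), sum(counts_q)
    kl = 0.0
    for a, b in zip(counts_p, counts_q):
        if a == 0:
            continue  # a zero-probability bucket in P contributes nothing
        p, q = a / total_p, b / total_q
        kl += p * math.log(p / q)
    return kl


chi2 = chi_square_2xk(new_visitors, returning_visitors)
kl = kl_divergence(new_visitors, returning_visitors)
print(f"chi-square = {chi2:.1f} with {len(new_visitors) - 1} degrees of freedom")
print(f"KL(new || returning) = {kl:.3f}")
```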

Anyway, thanks for moving the conversation in this direction.

I'm puzzled, since this seems to go totally against the grain of the "other" web analytics guru, Eric Peterson, who has been "evangelizing" KPIs that are constructed from Compound Metrics.

I've been working with a client whose needs require that we report using both "Eric Peterson style" KPIs (Averages, Rates, Compound Metrics) and several raw usage data points in order to provide additional context. Am I steering them down the wrong path by combining both approaches?

Hi Avinash! Thanks for the Great post!

For number 2, "percentages", did you Photoshop that picture with the "blog conversion rates with Raw Values", or is it actually available somehow in GA? I haven't been able to figure out how to get that view.

I noticed you say a bit below that: "I can’t do that in Google Analytics. Quite sad." But I think you are talking specifically about filtering for statistically significant data (which I have been waiting for for quite some time; any idea if it will ever be available?).

I would love it if you could add a small tutorial on how to set up the raw conversion numbers, if that exists.

Thanks again!!

Matt: I think we are on the same page, though I think in my post I might not have done as good a job as I thought I did.

I am not asking people to give up on averages, percentages etc.

I am saying: don't use them just as they are presented to you, because they could be presented to you much better. And if you do use what is presented, then segment, look at the distributions, don't jump to conclusions without checking statistical significance when you compare two segments, use raw numbers to get opportunity context, etc.

I think that is what you are saying in your comment.

My overall point is tools today simply puke data out, hence use it with caution and if you don't then you should not be surprised if you don't find actionable insights.

I agree that the sophistication required is only at an early, evolving stage in most web analytics practitioners; it will get better over time.

Mark DiSciullo: There is one and only one way to know if what you are doing is right.

Step 1: Do it.

Step 2: Apply this two part test.

A. You got actionable insights that you applied and added value to your business.

B. You really enjoyed the process of crunching the numbers.

If the answer is A. What you are doing is right (regardless of what anyone says or thinks).

If the answer is B. It is good you had fun, but move on.

If the answer is A and B then you are the rarest of the rare: A Ninja!

Oggy: No photoshop! That would be evil. :)

I use this extension (a combination of Greasemonkey and Firefox):

http://www.vkistudios.com/tools/firefox/betterga/

It gives you that and a few other nice features.

It was mentioned in the comments, but I should also have linked to it (done now!).

-Avinash.

Avinash,

Extremely useful !

This is a post to put on the wall next to my desk to remind me how to do it properly. Averages and family are so attractive…

Is there an inverse relationship between Analytics ability and grammatical correctness? ;)

Just kidding! Fan-tas-tic post (and fantastic-er comments)! Thanks for bringing it to life!

Great post Avinash! The part I like the most is that we need to present every number in context and unfortunately this doesn't happen a lot. We are surrounded with numbers we don't understand (and I personally question everything that doesn't come down to simple 2+2:)) – starting with APR that is higher than the interest rate to the 400% conversion growth (which actually is growth from 2 conversions to 8:))
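A small illustration, in Python, of keeping raw numbers next to a percentage. Strictly, going from 2 conversions to 8 is four times the starting value, i.e. a +300% change; whichever way it is quoted, the raw counts reveal how little data sits behind the headline. The function name `describe_change` is hypothetical.

```python
def describe_change(before, after):
    """Report a change as raw counts plus percentage change and multiple."""
    if before == 0:
        return f"{before} -> {after} (percentage change undefined from zero)"
    pct = (after - before) / before * 100
    return f"{before} -> {after} ({pct:+.0f}%, {after / before:.1f}x)"


print(describe_change(2, 8))  # 2 -> 8 (+300%, 4.0x)
```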

But I think we suffer more from people's personalities: there are BI teams that crunch numbers nobody understands, but when you ask them what they mean they look at you with that look, "you fool, how come you don't know? Don't even dare to speak to me, this is the ultimate metric"; there are also managers who want to tell their managers that they contributed to 400% growth so they can get the biggest piece of the pie; there are people who are scared to ask, because they don't want to look stupid; and there are the rest of us, who try to raise our voices and do the best for the business.

Your post reminds me of the "SEO Gap" metric I wrote to you about in an e-mail; when I think about it, it sounds to me like a compound percentage. What about that? :)))

Awesome post

I'm a statistician from Brazil and work with Business Intelligence. As a statistician, I have always tried to put my knowledge to work in Web Analytics, but here in Brazil this type of analysis is not considered very relevant by clients and agencies (though it is for me). There are infinite possibilities in Web Analytics with heavy statistics, and I'll put them to work against all the square web analytics minds. Thanks for the insights.

This is a great article and well thought out. Key performance indicators are difficult to work with in general because they are trying to condense a lot of information into a single number. Whether this is done through averages, percentages, or the result of some crazy compound metric, they all can erase the context that creates meaning around the data. Your simple techniques to reduce but still retain context is great insight. Thanks for the post.

Ben: Nope.

But there is certainly an inverse relationship between time available to write and grammatical correctness!

Toddy: The first part of your comment is the reason I tried to stress the use of raw numbers, they'll give context to the 5000% improvements or declines.

Your stress on the culture as the key is very important. I have found that it is rare that numbers or the magnificence of numbers is key. The company culture, group dynamics have so much more of an impact.

Now if I only had a penny for every time someone said to me "you fool, how come you don't know? Don't even dare to speak to me – this is the ultimate metric". :)

-Avinash.

Great post Avinash!

One thing I noticed – Under your explanation of percentages, you mention a tool that shows number of conversions instead of just percentages. In the screenshot this appears to be from Jeremy Aube's Report Enhancer script (from ROI Revolution), not from the VKI's Better Google Analytics script. I know this is minor, but Jeremy put a lot of effort into his script, and think he deserves a mention.

Thanks again for the great article!

This is all so good to know! It's hard to resist the temptation to use overall averages in KPIs – and ignoring how little that really can mean. I think sometimes the hard part is convincing the people in charge of that. Thanks!

You're really good at this kind of explaining. With complete information and visual effects, one can say you really did your homework well. Another warm "pat on your back."

Great info Avinash! I am so glad I ran across your blog… I'll be back.

ATTENTION VENDORS: For you dear friends, this has to be one of the most valuable blog posts ever written. It's time we stand up and stop being Number Bunnies and start supporting the needs of them Ninjas. I can't see any tangible reasons why the juice of these simple ideas wouldn't be implemented in all reputable Web Analytics tools by the end of the year. And really, we don't have to stop at these specific examples, but rather apply the mindset to all the numbers we use.

And there is more. We already have a clear vision of the future (not just Web Analytics but all data-driven web applications). There are a few key things that should always be present with data:

1. What the data really means (explain if needed, put it to context and rate how significant it is)

2. How it has changed and what can be expected in the future

3. Suggest and allow actions right there and then (and again, make sure the user knows what to expect)

4. How competition is doing with this same thing

And this is where the job gets fun…

I have always hated averages when it comes to genuinely finding what people want, as opposed to how many are coming etc.

In its most basic form I would say picture it like this;

You have 100 visitors: 50 visitors bounce and 50 visitors see 5 pages. The average page views will be 3 pages per visit, so you end up investing your time and effort on the presumption that everyone sees 3 pages.

If you segment and lose the averages, you will spend your most productive time both bringing in more of these 5-page viewers and improving the experience for the bouncers.
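The arithmetic in that example, sketched in Python. It assumes, as the comment does, that a bounce counts as a single page view; the segment labels are hypothetical.

```python
import statistics

# The scenario above: 100 visits, 50 bounce after 1 page, 50 view 5 pages
page_views = [1] * 50 + [5] * 50

overall = statistics.mean(page_views)
segment_means = {
    "bounced": statistics.mean([pv for pv in page_views if pv == 1]),
    "engaged": statistics.mean([pv for pv in page_views if pv > 1]),
}

print(overall)          # 3
print(segment_means)    # {'bounced': 1, 'engaged': 5}
print(3 in page_views)  # False: no single visit actually viewed 3 pages
```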

Excellent article Avinash, it really clued me up on some of the more obscure aspects of Analytics. The free Firefox extension "Better Google Analytics Firefox Extension" is a real bonus; it makes it so much easier to present data to clients. Nice one, matey.

Jim

A very good article Avinash. It concisely documents some cases leading to Analysis Paralysis.

The Firefox extension is a bonus takeaway from this post.

An excellent article – but I'm not sure you're using Google Analytics correctly. Instead of looking at the top ten results only, which of course will only show the terms with a bounce rate of zero… set the number of items per page way higher (i.e. 100) and look down until you find some statistically relevant data… it is in there.
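Looking past the rows built on one or two visits can be expressed as a minimum-traffic cut applied before ranking by bounce rate. A sketch in Python; the rows are hypothetical, and the threshold of 100 visits is an arbitrary assumption, not a test of statistical significance.

```python
# Hypothetical keyword rows: (keyword, visits, bounce rate)
rows = [
    ("keyword a", 1, 0.0),
    ("keyword b", 2, 0.0),
    ("keyword c", 640, 0.58),
    ("keyword d", 310, 0.21),
    ("keyword e", 3, 1.0),
]


def highest_bounce_rates(rows, min_visits):
    """Drop rows with fewer than min_visits visits, then sort by bounce rate, highest first."""
    kept = [row for row in rows if row[1] >= min_visits]
    return sorted(kept, key=lambda row: row[2], reverse=True)


for keyword, visits, rate in highest_bounce_rates(rows, min_visits=100):
    print(keyword, visits, f"{rate:.0%}")
```

Without the cut, the extremes of the list are dominated by keywords with one to three visits (0% and 100% bounce rates), which say almost nothing.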

I'm trying to better understand the process of user segmentation. Do WebTrends and/or Omniture Sitecatalyst have the ability to do these types of segmentations(return visitor, new visitor, organic search, etc…) or do I need to upgrade to something else to be able to do this?

I really love this blog, such amazing insight into the world of Analytics. I long for the day when my role moves from that of a report monkey to that of an analysis ninja.

Thanks

Yass: You should be able to do all the things, 100% of the things, with Omniture or WebTrends. I also do not believe that you have to upgrade or pay more (because one can do these things with free tools like Yahoo! Web Analytics or Google Analytics).

Just call your web analytics tool account representative.

-Avinash.

Yass: Adding to what Avinash said yesterday, you can absolutely do these things using SiteCatalyst. Correlations and subrelations allow you to break down critical reports by various data points such as organic/paid search, campaigns, etc. Where a certain data point isn't already available, you can almost always implement a bit of JavaScript code, such as a plug-in, to capture what you need so that important data can be segmented by it.

Discover, Data Warehouse, and ASI offer additional segmentation capabilities, and if you have these tools on your account, I highly recommend them, but they aren't entirely necessary for good segmentation. SiteCatalyst can do it quite well when the implementation is solid.

Feel free to let me know if you have any other questions about this.

Thanks,

Ben Gaines

Omniture, Inc.

e-mail: omniture care [at] omniture [dot] com

First off, I'd like to say great article. I went and downloaded that Firefox plugin for Google Analytics and started toying around with it. I have to admit it took some time to get everything set up to where I can make sense of the raw numbers relative to the goal conversions. But now that I have a firmer grasp on the basics, I think it has really opened a new insight into my goal conversions and the way I look at analytics. Well, this entire article helped with that ;). Anyway, thanks for the great post!

I can't figure out how to create the "Length of Visit" report you have in the averages section. Could you please give some quick pointers on steps to take?

Yulia: If you are using Google Analytics it is a standard report.

In the left navigation go to:

Visitors -> Visitor Loyalty -> Length of Visit.

If you are using a Yahoo! Web Analytics go to:

Navigation -> Time Spent On Site.

If you are using a different tool please check with your vendor.

-Avinash.

Thanks so much! Found it!

I know this is an old post but still :-)

I tend to agree that compound metrics are not a good thing. But don't you think it is sometimes necessary to break away from what the tools provide? You could still find it useful to come up with a compound metric that is more tailored to your business.

Raghu: I am confused by your comment.

Whether compound metrics are good or bad has nothing to do with tools. It has to do with your ability to use data (and key performance indicators) to 1. understand performance and then 2. take action.

If you can address the limitations outlined in the post as related to compound metrics, then it might be a good measurement technique to use in your case.

Avinash.

Compound metrics have their place in KPI analysis, like most calculations you can always cite their limitations.

I've been taking interest lately in numbers that mean nothing. It's always been a big deal in web marketing, since the days when people regularly reported "hits" (wince). We love to distill.

There is a book (which I haven't read, but I heard an interview with the author) called The Flaw of Averages. Love the title. And another book, which I am reading now, Moneyball. Both are about finding the truth in the numbers. I am surprised at how instructive Moneyball is for analyticals.

Great post.

KPI Measurement Techniques is a very delicate topic. And I believe that it really depends on the business culture that is practiced to determine the best KPI measurement techniques for each company.