Someone asked me this very simple question today. What's the difference between web reporting and web analysis?

My instinct was to use the wry observation uttered by US Supreme Court Justice Potter Stewart in trying to define porn: "I know it when I see it."

That also applies to analysis: I know it when I see it. :)

That, of course, would have been an unhelpful answer.

So here is what I actually said:

If you see a data puke then you know you are looking at the result of web reporting, even if it is called a dashboard.

If you see words in English outlining actions that need to be taken, and below the fold you see relevant supporting data, then you are looking at the result of web data analysis.

Would you agree? If you have an alternative, please share it via comments.

I always find pictures help me learn, so here are some helpful pictures for you. . .

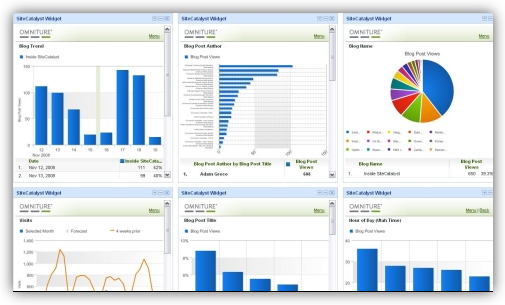

This is web reporting:

And so is this, even if it looks cuter:

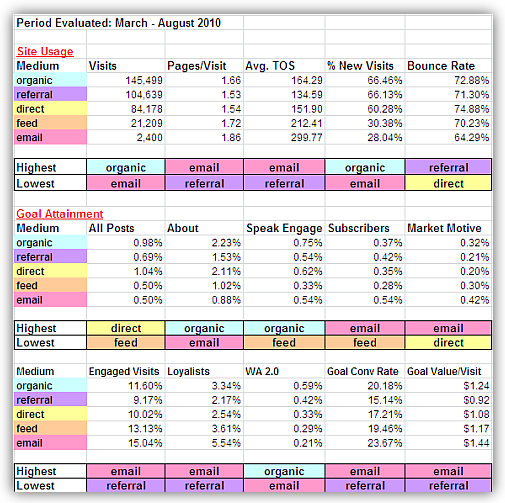

And while you might be tempted to believe that this is not web reporting, with all the data and the colors and even some segments, it is web reporting:

See the common themes in all the examples above?

The thankless job of web reporting, illustrated vividly above, is to punt the work of interpreting the data, understanding the context, and identifying actions to the recipient of the data puke.

If that is your role, then the best you can do is make sure you have taken the right screenshots out of Site Catalyst or Google Analytics, or charge an extra $15 an hour, dump the data into Excel, and add a color to the table header.

So what about web analysis?

The job of web analysis mandates a good understanding of the business priorities, creation of the right custom reports, application of hyper-relevant advanced segments to that data and, finally and most importantly, presentation of your insights and recommended actions using the locally spoken language.

See the difference? It's a different job, requires different work, and of course radically different skills.

Examples of web analysis? I thought you would never ask. . .

This is a good example of web analysis:

[And not only because it is my work! Learn more about it here: Action Dashboard.]

Notice the overwhelming presence of words. Words are not always sufficient, but I humbly believe they are always necessary.

When you check whether you are looking at analysis or reporting, look for Insights, Actions, and Impact on Company. All are good signs of analysis.

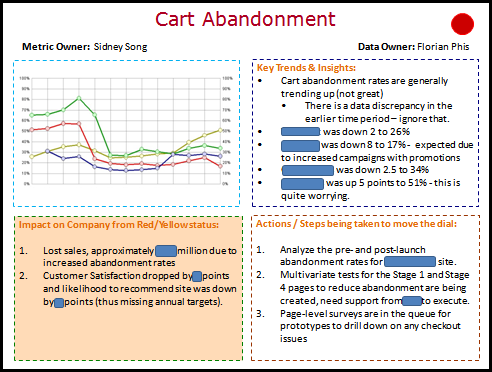

Here's another example of really good web analysis:

Ignore how well or badly the business is doing. Focus on the approach taken.

Here are some things that should jump out: a deliberate focus on only the "movers and shakers" (not just the top ten!); a short table with just the key data; and most of the page taken up with words that give insights and specific actions to take.

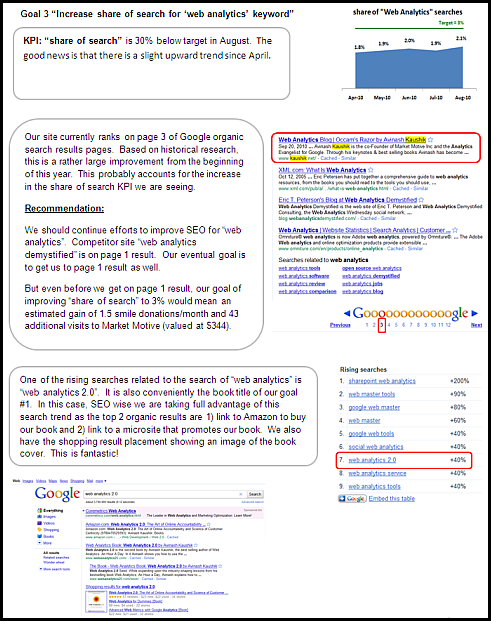

Another example that I particularly like, both for the style of presentation and how rare it is in our world of web analytics. . .

No table, no rows, no pies. And yet data holds center stage with clearly highlighted actions.

Normally, we all do the column on the left (it might look different, but we have it). Unfortunately, what we don't appreciate is the power of the middle column ("segmentation revealed"). That is super important because it gives the recipients exposure to the hard work that you have done and, in very quiet ways, increases their confidence in your work. Guess the outcome of that? They take the actions you are recommending!!

Analysts constantly complain that no one follows any of their data-based recommendations. How do you expose your hard work? In garish Las Vegas showgirl fashion, with all the "data plumes" (unsexily, in this case) hanging off the body? Or in quiet, concise ways? Only one of those two works.

One more? Okay, here you go. . .

Diana has loads of observations, supported by visuals (sometimes it really helps to show the search results or the emails or the Facebook ad) with highlights (actually lowlights) in red, and finally recommendations.

And note the tie to outcomes (another common theme in all examples above). In this case, the search improvements are tied to the increase in donations I can make because of sales of my book. 1.5 extra smiles per month! (All my proceeds from both my books go to charity.) A good way to get attention from the "executive" and get him or her to take action.

Do that. A lot. Be creative. Yes, it is hard work. But then again, glory is not cheap, is it?

Exceptions to the rule.

Not every output you get from your Analyst, or "Analyst" :), with loads of words on it instead of numbers, will be analysis. Hence my assertion that "I know it when I see it." Words instead of data pukes are just a clue; read the words to discern whether it actually is analysis or a repetition of what the table or graph already says!

In the same vein, not every output that is chock full of numbers in five-point font, with pies and tables stuffed in for good measure, is a representation of web reporting. It is hard to find the exceptions to this rule, but I have seen at least two in nine years.

Top 10 signs that you are looking at / doing web analysis.

Let's make sure this horse is really and truly dead by summarizing the lessons above as a set of signs that might indicate that you are looking at web analysis. . .

#1. The thing that you see instantly is not data, but rather actions for the business to take.

#2. When I see Economic Value I feel a bit more confident that I am looking at the result of analysis. Primarily because it is so darn hard to do. You have to understand business goals / outcomes (so harrrrrd!) and then work with Finance to identify economic value, and then you have to configure it in the tool and then apply advanced segments, and then figure out how things are doing. That is love. I mean that is analysis! Or at least all the work that goes into being able to do effective analysis.

#3. In the same vein, if you see references to the Web Analytics Measurement Model (or better still, see it in its entirety on one slide up front), then you know that the Ninja did some analysis.

#4. Any application of algorithmic intelligence, weighted sort, expected range for metric values (control limits), or anything that even remotely smells of ever so slightly advanced statistics is a good sign. Unknown unknowns are what it's all about!

That said, the mere existence of statistics is not sufficient. All other rules above and below still apply. :)

#5. If you see a Target mentioned in the report / presentation, then the Analyst did some business analysis at least. See the top right of the picture immediately above.

#6. Loads and loads and loads of context! Context is queen! Enough said.

#7. I have never seen web analysis without effective data/user segmentation. I think this statement is in both my books. . . "All data in aggregate is crap." Sorry.

#8. If there is even a hint of the impact of the recommended actions, then I know it is analysis. It is hard to say: I am recommending that we shift this cluster of brand keywords to broad match. It is harder to say: I am recommending. . . and that should increase revenue by $180,000 and profit by $47,000. Look for that.

#9. If a table you are presented with has more than three metrics, you might not be looking at analysis.

#10. Multiplicity! If you see fabulous metrics like Share of Search (competitive intelligence), Task Completion Rate (qualitative analysis), or Message Amplification (social media), those are good signs that the Analyst is stepping outside Omniture / WebTrends. I would still recommend looking below the surface to ensure that they are not just data pukes, but the good thing is these are smarter metrics.

User Contributions:

#11. From Carson Smith: If someone looks at your analysis / report / presentation / dashboard and has to ask "and… as a result?", then it might be reporting. What happened should be obvious.

[I love applying the "Three Layers of the So What" test to any analysis I present or see. I ask "so what" three times. If at the end of it there is no clear action to be taken, then I know it is just web reporting, no matter how great it looks or how much work went into it. Ask "as a result?" or "so what?" of your work!]

#12. From Chuck U: 1) If it can be automated, it's probably not analysis 2) If your data warehouse team says they can automate it for you, then it's definitely not analysis. [#awesome! -Avinash]

Can you think of other signs? Please share your suggestions via comments. I'll add the best ones to this list.
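Two of the signs above lend themselves to a quick illustration. The Python sketch below shows one way to compute an expected range for a metric (#4) and a rough expected impact for a recommendation (#8). It assumes the expected range is the historical mean plus or minus k sample standard deviations (k = 3, as in classic Shewhart control charts) and that impact follows a simple visits-to-orders-to-profit funnel. The function names, the funnel model, and every number are hypothetical illustrations, not how any analytics tool computes its ranges and not the math behind the $180,000 example.

```python
from statistics import mean, stdev


def control_limits(history, k=3.0):
    """Expected range for a metric: historical mean +/- k sample standard deviations.

    Assumes `history` holds at least two comparable past values
    (e.g. daily conversions); k=3 follows the classic Shewhart convention.
    """
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation (n - 1 denominator)
    return mu - k * sigma, mu + k * sigma


def outside_expected_range(history, recent, k=3.0):
    """Return the recent values that fall outside the range built from history."""
    lower, upper = control_limits(history, k)
    return [x for x in recent if x < lower or x > upper]


def expected_impact(extra_visits, conversion_rate, avg_order_value, profit_margin):
    """Hypothetical funnel estimate: extra visits -> orders -> revenue -> profit."""
    extra_orders = extra_visits * conversion_rate
    revenue = extra_orders * avg_order_value
    profit = revenue * profit_margin
    return revenue, profit


# Hypothetical daily values for one metric (sign #4).
past_days = [100, 102, 98, 101, 99]
this_week = [103, 110, 90]
print(outside_expected_range(past_days, this_week))  # [110, 90]

# Hypothetical inputs for a recommendation (sign #8):
# 10,000 extra visits, 2% conversion, $90 average order, 25% margin.
revenue, profit = expected_impact(10_000, 0.02, 90.0, 0.25)
print(f"revenue ${revenue:,.0f}, profit ${profit:,.0f}")  # revenue $18,000, profit $4,500
```

A value outside the expected range, or an estimated dollar impact, is a prompt to start the analysis, not the analysis itself; the context and the recommended action still have to come from the Analyst.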

In the list above, and in the examples in this post, you see my clear, and perhaps egregious, bias for business analysis and business outcomes and business actions and working with many parts of the business and business context. But I've always believed that if you and I can't have an impact, then why are we doing what we do?

I hope you've had some fun learning how to distinguish between web reporting and web analysis. It is a fact of life that we need both. The bigger the company, the more they want data pukes, sorry, reporting.

But if you have "Analyst" in your job title then you perhaps now have a stronger idea of what is expected of you to earn that title. If you have hired a "web analysis consultant" and are paying them big Rupees then you know what to expect from them. Don't settle for data pukes, push them harder. Apply the rules above. Send their "analysis" back. Ask for more. Raise your expectations!!

I hope now "you'll know it when you see it," and have more datagasms!

Okay, it's your turn now.

How would you answer the question about the difference between web reporting and web analysis? What signs do you look for when evaluating the work of your Analyst or Consultants?

Please share your thoughts via comments below.

Thanks.

PS: In case you are curious, here's the current official definition of porn (strictly speaking, the legal test for obscenity), as outlined in Miller v. California:

(a) whether the 'average person, applying contemporary community standards' would find that the work, taken as a whole, appeals to the prurient interest,

(b) whether the work depicts or describes, in a patently offensive way, sexual conduct specifically defined by the applicable state law, and

(c) whether the work, taken as a whole, lacks serious literary, artistic, political, or scientific value.

Via

I had a history teacher who would mark "as a result??" on papers anywhere facts were presented but not analyzed in the context of a thesis. Fearing a now non-existent red pen, I still find myself using this phrase in my analytics reports nearly a decade later. But the deep-thinking thesis tends to be replaced with a more superficial business goal.

I'm not sure how thrilled my teacher would be about that part :)

Oh Dear,

I fear that the marbles may still be in the master's hand.

This young grasshopper needs to get his dashboards up to scratch.

A relevant aside: if you are a web analyst who is always relegated to being a reporting squirrel, don't get discouraged by inaction. Try outlining your recommendations to stakeholders instead of your boss's boss. Your peers may hate that you are criticizing their work, but they will respect you in the long run for approaching them instead of their superiors.

Context is a godsend FYI. When we do make insightful discoveries – make sure to leave the egos out of the office. The easier the analytics is to digest, the easier it will be to make information tribal. Silos always prevent action.

Thank you for highlighting the "Analytics" in Web Analytics.

I am constantly looking for new ways to slice and dice client data to get at actionable insights but I don't always present it in a way that the action steps are obvious.

This gives me some ideas about how to better present my findings and drive real business outcomes ($$$). Keep up the great work!

Anthony

This is great. It's often hard to distinguish the two until you are faced with a large amount of data puke.

When teaching the difference to my team, I've found the best method is to start with the report and then layer questions on top of it that will force them to analyze.

1) If it can be automated, it's probably not analysis

2) If your data warehouse team says they can automate it for you, then it's definitely not analysis.

Alas, in the past I have been guilty of just data puking. Fortunately, in my evolving transition to analyst, it only seemed right that I give my opinion about the "whys" of the data; tying them into business goals is, well, the reason we're all here!

Thanks Avinash for making very clear examples of what good analysis looks like. This fills a gap I didn't know I had until I saw it! (heh-hey)

Part of our delivery model, reporting-wise, is to never deliver emailed reports, unless they are directly accompanied by a real-time conversation (call, meeting). Most of our clients have direct access to their own analytics, so they can go print ad hoc reports whenever they choose to. I struggle with this dedication to context in our business model – but I think your point reassures me to hold fast to this principle.

I would say it's less about analysis than it is about context. Our reports contain varying amounts of analysis (based on your characterization above), but are delivered in a way that ensures a consistent/persistent adherence to context. Taken out of context, many of those reports are as vanilla as can be. Any machine (or automaton) can give us numbers, and anyone with a keyboard can write numbers into a bulleted list, but doesn't the real value come from applying context? Context *is* queen, but isn't it also king, prince, and populace?

Thanks for the reassurance anyway :-) It's really, really hard to compete with software…and the floors of "analysts" supplying the grist. I often wonder if we're doing the right things.

This blog keeps me humble. First you say we have to find the right metrics. Then you say we have to segment them. Now you're saying that how we say it is just as important, if not more so?

Jokes aside, I have a few reports I need to cull and turn into analyses.

Thanks for the constant inspiration, Avinash :)

(And thanks for the validation, fellow blog commenters)

Great article on how to make the jump from reporting to insights. As an analyst who has worked in this area for a few years, I have been looking for ways to better present insights, and I'm happy to see this article is exactly what I need.

To other readers: I want to stress the "creativity" in doing the analysis. The master Avinash showed us the door; the real work is for us to understand the business needs and priorities, and to create our own solutions that best help our organizations.

For your top 10 signs that you are doing analysis, I might add a listing of the previously recommended actions: which ones were acted on, and which ones are just another long to-do list?

Then list the current actions, so you can compare them and have a chance to question each previously recommended action for activation or deletion. Many times people give themselves way too many "to-dos" and don't remember what they planned last month, last week, or last year.

@Chuck U.

I don't necessarily disagree with you. But I think there are a lot of automated reports that can prove VERY useful in generating insights – after you do the leg work to make them useful. Maybe the "stock" reporting suites are not useful automated dashboards, but in most analytics platforms you can customize reporting & automate them to generate useful web insights.

Example: putting COGS data into Omniture and comparing AdWords campaigns segmented by specific products, or maybe pairing NEW visits to Uniques and then segmenting by marketing channel in GA? Those are all reports you can automate.

Thank you for the incredibly timely (and as always, useful) reminder that data puking is not analysis.

I think it's important to educate our stakeholders – to wean them off the need to see "all of the data" and instead help them understand the power in seeing segmented data with actionable insights.

Carson, Chuck: Absolutely loved your ideas. I've added them to the main blog post body. Thank you!

Craig: It is such a delight to know that in a small way the blog post helped expose some "unknown unknowns" to you! I am glad the practical examples were helpful.

Gary: I can confirm that the approach you are taking is highly unusual, and totally awesome! So many consultants hoard the client's data and access to data. This perhaps comes from a fear that if clients can help themselves with the reporting part, then they won't need the consultant.

That actually is true.

But if the consultant is good, even decently so, they'll of course realize that there is a lot more money to be made in providing analysis that drives change. But because analysis is harder to do, consultants are happy making $50 an hour when they could do 3x the amount of work but charge 5x that $50!

Grant: Fabulous point about having to be creative and then crafting a solution that is unique to each business. Even for two companies that are in the same business, solutions for analysis are typically radically different.

Nelson: I am afraid I am with Chuck on this. If you can automate it then it is probably a very good report, but it can't be analysis. There are so many things that go into analyzing data: tribal knowledge, fast changing context, this week/month's company priorities, changes to the competitive ecosystem, flavor of the month marketing efforts, newly implemented programs and so so so much more. None of these can be automated.

So from a standard data puke you can move to a really nice "data snapshot of things we cared about last month" using automation. But then you (the Analyst) need to start your work and present the analysis, perhaps in one of the formats in the blog post.

Sara: Great point!

A little while back I had a blog post called Six Rules for Creating a Data Driven Boss. That has some tips (in the same spirit as your comment) to make that key shift in behavior of our leaders.

-Avinash.

This is a great way to present reports. One of the challenges is to translate the data puke into what the CEO is really interested in, and to help steer him in the right direction.

I have been A/B testing them just to get the right one! I find the simpler the better, and I resist the urge to over-report!

Thanks again Avinash.

Hmmm…new axiom?

Under-report, and over-deliver?

Avinash,

Incredibly useful and helpful blog post! I like the elements in your posts that bend my thought process a little bit. Your analysis slide on the 'Survey and Web Analytics: What We Learned by…' did that for me today. Thanks for the multiple examples of analysis presented.

I think it's important, when you make recommendations, to identify what you expect to happen as a result if they are implemented. It is really like creating a testable hypothesis. The follow-up to implementing your recommendation should be to monitor whether the expected result is achieved. You really need to spell the expectation out and not assume that it is implied: this is what you expect, and this is what you will test for to see whether your recommendation was successful. If necessary, this can also help to justify and prioritize the implementation of recommendations. Which recommendations do you expect to get the most bang out of? Your #8 mentions identifying the impact of recommendations as a sign of analysis. I agree, but I think that identifying expected results should be mandatory, for the reasons above.

Side question…do you have any favorite resources to help support people becoming better analysts? Blogs? Books?

Great post, Avinash!!!

Alice Cooper's Stalker

Hello everyone,

One of my college professors always said about reports that she never wants to assign an "eye" test. She knows we can all see and read – what she wanted was independent analysis!!!

This post and these comments remind me of that statement.

Random thoughts + comments that have popped up in my brain while reading the blog post and commentary:

1. Sometimes your boss / client simply wants the report, period. This is always tough for that ninja inside of us that is trying to slice his way out into the forefront. You will have to use your judgement as to the timing of introducing your analysis into your reporting.

2. That brings me to how you deal with your boss / client / colleague. They didn't tell us, when we signed up to be a WA ninja, that we'd also have to be a master psychologist of the human condition. But guess what? Knowing how to approach someone with your analysis will make it or break it for you. You can't always do the "hard-sell" thing with someone who is completely put off by it, and you can't always use reverse psychology on someone for whom that doesn't work.

3. You will also HIGHLY benefit from putting on your educator / professor hat. Even if you are someone who has always done "Reporting" as Avinash defines it, your knowledge level most likely exceeds that of the person for whom you are doing the analysis. This is another opportunity to let your client / boss / colleague know that you're not just defining and reporting metrics, and that you're actually doing some seventh-degree black belt ninja type stuff!

Very basic example of what I mean:

Question: "What does "Bounce Rate" mean?"

Non-ninja reply: "It's the percentage of single-page visits to your site".

Ninja reply: All of that, plus "We're going to use it, together with other figures, to evaluate your web site pages' performance as points of entry, etc., etc."

Don't discount the power of what I like to call "verbal" analysis and insights. It's the stuff that doesn't show up in the box score, but goes a long way.

4. Keep fighting the good fight. Tools give you pounds of fancy reports, data, alerts, etc…true analysis takes time, patience, a pinch of cunning, a dash of "out-of-the-box" thinking…but most of all, the tool that has helped me the most up to this point has been my ability to be a "people person" and like Avinash mentions, "…using the locally spoken language". That is HUGE folks!

Thank you much!

In other words, “don’t make me think” (with thanks to Steve Krug). This is especially important the more senior the level of the recipient.

Sending web reports means the recipient has to do something with the data, get overwhelmed and not do anything with it. Sending analysis gives the recipient paroxysms of joy, jumping around their office screaming "do this NOW!". Company makes loads more dosh, you are automatically legendary :)

A word on, well, words: making recommendations does not include answers like this when someone asks how many hits the website gets: "I’m afraid we no longer measure hits, but 1235689 people visited the site and viewed 1515151 pages. Of these visitors, 53% came from search. 93% of these were from Google, with 17% of traffic from the keyword "pedagogy", whilst the biggest referrer was hugereferrer.com and the main component of direct traffic was thought to be the DG’s weekly broadcast email. Of the 70% of new visitors, 83% were from Australia, 10% from the US and 3% from India…" This is basically a data puke in a sentence, and I have to admit it is the kind of stuff I would pass on as a report a few years ago (and yes, people still ask about hits!)

Dear Avinash:

This post arrived just in the right moment for me.

I'm participating, as a teacher, in an executive education course on Interactive / Digital Marketing in Mexico, and last Saturday we discussed the importance of focusing on what really matters for different business models. Your post will be very helpful for adding new ideas to the discussion.

Thank you!

Web analysts need to read this to really understand if they are doing work to the true meaning of their title :-)

The one thing I often find hard to understand is why reporting and analysis are so misunderstood.

Thanks for the great post.

Avinash: You are the man!

The difference between web reporting and web analysis, from my point of view:

Web report = data

Web analysis = information

To make a good analysis you must have good reports. You can’t dismiss reports, because they are the basis for a nice analysis, and you need the right data to show concise results to your clients/directors.

I hope that what I say here makes sense!

Regards,

Gary: It took me a second to get it, but I love your axiom: "under report", and over deliver!!

Alice Cooper: A list of my top ten favorite blogs is in the right navigation of the home page (towards the bottom). They are blogs that inspire me. Here is my blog post on how I personally expand my knowledge and try to become better:

~ Web Analytics Career Advice: Play In The Real World!

But overall, it just takes constantly being in a receptive business environment and applying structured thinking to existing solutions to become a better Analyst. I realize that sounds like such absolute common sense!

Joe: I agree with you that you don't want to hard sell. You have to get them to a point, gently, where they want to have analysis (so much that they demand it!). : ) Some tips on this are in my Six Rules to Create a Data Driven Boss post.

Communication (your tip #1) is sooooo important. Sometimes the baby does not want Spinach and just wants Chocolate. But we have to figure out how to get the baby to like Spinach! :)

Jon: We have a completely shared vision. Of my two "exceptions to the rule," the first was that the mere presence of words does not equal analysis. As you have beautifully demonstrated, using hits no less (!!), words can be very effectively used as data pukes!

Luiz: I concur with you that you can't escape from reports. At least a few focused relevant customized reports are probably a key input into the analytical process. They become a great start of the journey (sadly we often mistake them for a destination).

-Avinash.

Thanks for explaining the complicated process of web analytics in simple words.

Really appreciate it very much. :)

I like words more than numbers; words about numbers I can live with :) Much better than scary Excel sheets!

Thanks.

As I see it, web analysis is the process of understanding various activities, such as traffic and user activity, and finally ROI. Later, preparing a standard document based on that and sharing it with people is what is called reporting, so that they understand it easily and provide inputs that help in reaching a conclusion.

Hi,

Short and nice title for your post. We have an in-house definition when quoting:

WEB REPORT = converting numbers into graphs

WEB ANALYSIS = converting www into $$$

As I see it, reporting and analytics are the first two steps of the user behavior optimization process.

Reporting provides the data for analytics; analytics predicts possible ways to achieve site goals. And then the cycle repeats, again and again. Each step is useless without the other: with bad reports, analytic recommendations will be wrong; weak implementation leads to declining stats, etc.

So comparing these concepts is like comparing a table and a chicken. :)

Thanks Avinash, this is really helpful.

Much appreciated!

Analysis is subjective: at its best, it reveals connections, strengths, and weaknesses otherwise unknown. Reporting compiles.

As a very successful CEO once said to me "Talk to me like I am a three year old"…I never forgot that :)

Regarding the difference between reporting and analysis, I'd argue that they are complementary to each other:

Reporting = The important groundwork/research which supports the analysis

Analysis = Insight and the resulting action

Like anything, without the hard work, there is never any payoff. Without mining the right data, drawing the vital insights and actions is often impossible.

Gill, that's a great way of saying it, i.e. that they are complementary to each other.

Let me be radical and disagree

Language can be crazy and labels are applied and stick. We teach "Consumer Behaviour" but it goes beyond consumers (into B2B) and beyond behaviour (into attitudes etc). In the same way WEB ANALYTICS has become an overall label for all these activities.

In my opinion

Web Analytics = the process involved

Data display = what you call web reports

Reporting = the action taken/suggested after making the analysis

Another point!

There are different levels at which a report can be written. At the first level, it can simply relate facts, or reproduce them. The second level will involve making some interpretation of these facts and some recommendations. A third level will go beyond the data, possibly by incorporating knowledge found elsewhere, and will speculate or make predictions about what a future state may be.

This third level is true consultancy, where the researcher is almost acting as a decision-making partner. This level of reporting requires confidence and intuition.

Another great post Avinash. Context is key in creating actionable web analysis reports. Another important component in web analysis is organization of data.

Neeraj: Perhaps that is a key distinction. Is your job to give data to someone who can then interpret it, find insights, and make decisions, or is it your job to provide the decisions the data supports?

The latter is analysis and the former is reporting. We need both in life, but we should not confuse one with the other. :)

Adam: Thanks for adding this to the thread, it is a wonderful way of framing the difference.

Nigel: I appreciate your opinion Nick, thank you for adding your thoughts to this thread. I would disagree with your definition of Reporting, but I am happy that your focus is also on analysis.

To your second comment… I love the idea of thinking of ourselves as a "decision making partner".

-Avinash.

While I don't disagree with you, the tone of the post seems to be underplaying the role of web reporting vis a vis the analysis. Remember that without the reporting and the data in the first place, you would not have any analysis.

Also many times, good reporting creates new insights and spurs analysts to think along a new line that they haven't thought before.

What organizations need therefore is good web reporting followed by good analysis.

Have you ever read/watched Mindwalk?

It talks about how we try to separate things into small pieces, like the parts of a clock, in order to understand them. But in most cases, things are better seen as connected to other things, not as made of small things.

This post reminded me of that. We make things very complex, dissecting every part of them, but sometimes the answer is seen if you take a step back and look at the big picture. =D

Great Post :).

The value of a web analyst is, in fact, in deriving such insights.

Thank you for the post, insightful as always. What I'm looking for, as a beginner, is an Excel template of such a dashboard into which I can plug data from Google Analytics and instantly create actionable data that can be presented to the company.

Does anyone here know of such a template / tool available?

I recently described Analysis and Reporting as similar to Love and Marriage.

Very often, a "piece of work" will start as analysis, and slowly move into reporting: You find out something exciting, make recommendations, measure the impact – all is well and seen through rosy spectacles.

People get interested in it, they want to know about it – the Love story carries on.

And then, after a while, things start not being so fresh anymore, but we carry on by strength of habit, and one struggles to fill the comment box next to the chart that was once lovely.

My view on that is that there are ways to make reporting exciting again – in a way, and to carry on with the marriage theme, you can spice up your reporting!

My way is to make it topical (not all the metrics make it every time; I pick the charts that actually show something) and to comment heavily, so that my stakeholders can get to the juicy bits within minutes (I didn't dare go with seconds, but that is the idea).

The other thing is that Analysts will live and die by their ability to influence, and reporting is an important tool for that, as it is a point of contact with stakeholders. Not neglecting it and putting some care into it often pays.

I guess the reason why there is a fair bit of disagreement around, is that reporting put together by ninjas is pretty different from the squirrel friendly variety…

Also, Daniel Shaw, I love your comment :D.

I recommend Omniture Site Catalyst, which comes out of the box with a variety of pre-built actionable reports and segments that will allow your business stakeholders to make informed decisions.

Hi there.

I find this article very important. Thanks for posting it.

A very good explanation about the difference between web reporting and web analysis.

@ Daniel Shaw

You can actually export data from GA to excel quite easily. Have you tried?

You forgot to say another main difference between analysis and reporting: time spent! :-)

Giving the right recommendation needs time.

Good job, Avinash. I think it is important to have KPIs to evaluate strategies. The data alone is not enough to understand user trends, but a small dive into metrics without KPIs is also important for spotting new trends.

Web Analysis is translating Web Reporting into an action plan. It's turning "WTF?" into "What to do next" and may also offer some insight into "How to do it better next time".

Analysis also makes explicit what is opinion based on incomplete data and what is "statistical truth" as told by the data, and how to validate the analysis with a test or experiment. It is very easy to misinterpret or over-interpret the data.

The analyst should be able to draw the lines in the right place and show where the lines end and what that means, but more importantly, what it doesn't mean.

Wow – thank you for this. Now if everyone I know can read this, it would be fantastic!

I've seen 100s of monthly reports that will make a client's eyes glaze over. Anyone can build a spreadsheet full of numbers. Telling what the numbers and trends mean is where the value is.

Great post!

I asked myself today (actually just minutes ago before visiting your blog… seriously) what I've 'actually' accomplished with all my data analysis.

At the end of the day, I realize 90% of it is junk and maybe 10% is useful. Give me a campaign and keyword report and keep the rest. :) Why do I stare endlessly at unsegmented visits, pageviews, etc.?? "So what!" you would say :). I need to break the habit and focus on what matters.

Thanks as always for your expert insight.

Ravi: You are right, the overwhelming emphasis of the post is to stress the value of analysis. My belief is that we already have an egregiously high amount of reporting in our world (and much of it needs to go away!).

I had assumed that people who are smart enough to focus on doing analysis will know that you do that by leveraging efficient reports. But perhaps, per your comment, I should not have made that assumption.

Marcelo: I have not read/watched Mindwalk, but I will add it to my list. Anything that encourages a focus on the big picture is an automatic favorite of mine! :)

Daniel: In the small chance that you have Web Analytics 2.0 please look in the CD that is on the back cover. It has a couple of different sample dashboards that are web analytics specific (with sparklines and space for graphs and numbers and insights in words!) that you can adapt to your own use.

In case you are using Google Analytics then check out the various applications that come with built in dashboards for you to use, a list is here: Reporting Applications – Google Analytics.

Next Analytics and Excellent Analytics might both fit. There are others as well.

[In context of this post I must hasten to point out that this will just give you better reporting, you'll still have to figure out how to convert it into analysis!]

Chris: I love your analogy of love and marriage, thank you so much for sharing it with us. Making things "spicy" by "trying other things than what has become routine" will work in either case! : )

Giulio: Good point. Perhaps this is another nuance….

Reporting: Fast, painless, low impact.

Analysis: Less fast, painful, high impact.

:)

Salvatore: Ha ha!

I like your comment: Analysis is turning "WTF" to "what to do next"

-Avinash.

Thanks for dissecting the complicated process of analytics.

This provides lots of insights about how to better present my findings while simultaneously driving real business outcomes.

Muchos gracius or something like that!

I recently gave a presentation on web analytics in my organization, where I asked the audience how many of them receive emails from our group with pretty graphs and charts that they don't know what to do with.

A bunch of hands among the 90+ audience went up, but to my embarrassment, a senior manager's hand went up too!!

Yes – We do our best to separate analysis from reporting but it is soooo harrrd :)

One more interesting thing that you pointed out is how some "commentary" repeats what the graphs are saying, except in words – Avinash – you don't miss anything! :)

Hi Avinash,

Thanks for the great post. In one of the dashboards you have showcased above on SEO, you mentioned "the estimated gain of 1.5 smile donations".

Could you explain how to calculate this estimated gain through SEO? Unlike SEM, in SEO we can't have a jump id tagged to each link.

Thanks!

Pei hoon

P H G: We are all able to track SEO separately from PPC (if our paid search campaigns are tagged correctly). From there it is possible to measure the impact of current SEO efforts.
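To make the tagging point concrete, here is a minimal Python sketch of how visits could be split into paid and organic search once paid links carry a campaign tag. It assumes paid search links are tagged with `utm_medium=cpc` (a common Google Analytics convention), and the `SEARCH_ENGINES` list and `classify_visit` helper are hypothetical; in practice you would rely on your analytics tool's own channel definitions.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical list of search-engine host fragments, for illustration only.
SEARCH_ENGINES = ("google.", "bing.", "yahoo.")

def classify_visit(landing_url, referrer):
    """Label a visit as paid search, organic search, or other.

    Assumes paid search links are tagged with utm_medium=cpc; any
    untagged referral from a search engine is treated as organic (SEO).
    """
    params = parse_qs(urlparse(landing_url).query)
    if params.get("utm_medium", [""])[0].lower() == "cpc":
        return "paid search"
    host = urlparse(referrer).netloc.lower()
    if any(engine in host for engine in SEARCH_ENGINES):
        return "organic search"
    return "other"

print(classify_visit("https://example.org/?utm_source=google&utm_medium=cpc", "https://www.google.com/"))  # paid search
print(classify_visit("https://example.org/donate", "https://www.google.com/"))  # organic search
```

The design choice is the one described above: the paid side is identified by its tag, so whatever search traffic remains untagged can be attributed to SEO.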

The Analyst in this case made several recommendations for search engine optimization improvements that would yield additional traffic. This can be estimated from past performance (made x changes, got y increases in traffic or conversions) or from competitive intelligence (or from gut feel!).
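The "past performance" approach can be sketched as simple arithmetic: divide the gain seen after past changes by the number of changes, then scale by the number of planned changes. This is a naive linear estimate under the assumption that every change contributes equally; the `estimate_gain` helper and the numbers are hypothetical, not the Analyst's actual method.

```python
def estimate_gain(past_changes, past_gain, planned_changes):
    """Naive linear estimate of the gain from planned SEO changes.

    Assumes each planned change yields the average per-change gain
    observed in the past (a rough heuristic, not a forecasting model).
    """
    if past_changes <= 0:
        raise ValueError("need at least one past change to estimate from")
    gain_per_change = past_gain / past_changes
    return gain_per_change * planned_changes

# Hypothetical history: 4 past changes produced 8 extra outcomes per month.
print(estimate_gain(past_changes=4, past_gain=8, planned_changes=3))  # 6.0
```

A linear extrapolation like this is easy to explain to stakeholders, but it ignores diminishing returns, so it is best presented as a rough range rather than a promise.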

In this case the Analyst said if you make the changes I am suggesting then it would result in "1.5 smile donations" increase. (Smile donations is a proxy for outcomes).

You can't tag SEO links, but for keyword or page improvements you make you can still measure success.

-Avinash.

I'm hardly qualified to make strong comments on this topic, but it strikes me that much of the 'web reporting' that I see lacks the basic principle of the scientific method: test and report.

I so often see suggestions to change XXX or update YYY or shift $$$ to alternative ad base ZZZ, but in my experience these 'web reporting' documents are largely useless unless they are revisited later to evaluate the effectiveness of the suggested strategy.

Worse, they hardly ever discuss bucket testing potential strategies, evaluating the results, and choosing the most effective one.

Why is that?

From a management point of view, limitation of liability in the event of strategy failure and confidence intervals are surely extremely important?

Personally, I would refuse to call anything that has a clear plan to evaluate the outcomes of its actions web reporting.

I would add that a web analytics team should not be outsourced. I believe it is most important to have internal know-how for selecting, rating and evaluating the right agencies, services and tools, and for success/performance measurement. The analytics team must be fully aware of the business strategy and deeply involved in all defined actions. Know-how in areas like SEO, paid search/advertising, usability, mobile, and social media is necessary for optimization, not only for analyzing outcomes.

If someone believes that "analytics can be outsourced", this service is very likely to become reporting, imho.

@matthias I disagree. Many businesses cannot afford to hire a full-time analyst, never mind a team with all the skills required to implement and manage a successful conversion rate optimisation process. The right mix of web design, SEO, marketing, and data analysis also varies throughout the implementation of a project, across campaigns, and through technology changes.

Outsourcing these tasks makes the same sense as outsourcing accounting, payroll or any other business support services that are not an integral part of the core business value offering. Some companies are better off without the baggage, others seem to prefer internal control; this is a constantly changing fashion in the IT industry as a whole, and even within different departments of large organisations.

However, as a consultant who project manages the implementation of appropriate in-house optimisation processes, I am obviously biased, though my bias is based on real-world experience. I have done work for companies who thought they had all of the requisite skills in house, until they read my analysis: they had become so accustomed to reading reports that they didn't know what they didn't know. This is a huge part of the problem, the unconscious incompetence of many job-titled analysts. Alas, I once was one myself, regaling my employers with reports that they could not interpret, and that I did not interpret for them.

The gap was in the understanding. I thought I was giving them information. They thought I was giving them a pretty picture with some interesting shapes. As long as the chart seemed to be going up, they felt they should be happy, even if they didn't really know why it wasn't making them richer. The bigger the organization, the deeper the unconscious incompetence, misinterpretation and miscommunication. I am sure you are not like the younger me.

In my experience, outsourcing to get a real analyst, with a completely fresh perspective and no internal politics to colour his or her advice, can turn the business around, and prevent years of misdirected, flavour of the month website redesigns. A good consultant will not just have analytical skills, but should also be able to communicate the business needs to the IT/web design team/individual/supplier as well as interpret the data for the business.

The analysis will be complete if we add metrics such as the target and the actual value as a percentage of the target. We can also compare against the previous month as well as the same month of the previous year.

For example, if we are reporting the visits for a particular month and compare them with the target that was set, with the previous month, and with the same month of the previous year, this will lead to many questions and thereby many recommendations/action items.
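A minimal Python sketch of the three comparisons described above for a single monthly metric such as visits. The `compare_month` function name and all the numbers are hypothetical, chosen only to show the arithmetic.

```python
def compare_month(actual, target, prev_month, same_month_last_year):
    """Compare one month's value (e.g. visits) against target, previous
    month, and the same month of the previous year, as percentages."""
    return {
        "pct_of_target": 100.0 * actual / target,
        "mom_change_pct": 100.0 * (actual - prev_month) / prev_month,
        "yoy_change_pct": 100.0 * (actual - same_month_last_year) / same_month_last_year,
    }

# Hypothetical numbers for illustration only.
result = compare_month(actual=90_000, target=100_000,
                       prev_month=80_000, same_month_last_year=120_000)
for name, value in result.items():
    print(f"{name}: {value:.1f}%")
# pct_of_target: 90.0%
# mom_change_pct: 12.5%
# yoy_change_pct: -25.0%
```

Note that these numbers only raise the questions (why are we up on last month but well down on last year, and short of target?); the analysis is in answering them.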

Avinash, is the blog starting to seem like work with the volume of responses?

;-)

There is a lot in the thread that flows away from and back to the original subject (including me). That being said, there are many comments about the depth and density of reported information. At the risk of being trite, this would be the simplified arithmetic:

Web Reporting + Insight = Web Analysis

and one for insight:

Analytics data + Context = Insight

More arithmetic since I'm rolling:

1 picture : 1,000 words

1 Conversation : 1,000 reports

Fun with arithmetic!

Doug: You are well qualified! : )

Your observation is apt. But the job of "reporting" is simply, to put it a bit crudely, data regurgitation. Go grab as much of it as possible (the more the better to impress the bosses / clients that you are doing "work") and then toss it over the fence for someone else to analyze and, as you say, recommend potential strategies (hopefully via testing) to choose optimal outcomes.

My hope with this post was to lay bare that difference so that all of us who work on digital analytics can be honest with ourselves.

Matthias: I humbly don't think it is quite that cut and dry.

I think this exists on a spectrum: Web Analysis: In-house or Out-sourced or Something Else?

In the post above I made clear that in the long run, having the majority of web analysis in-house should be the goal. But it is equally important to outsource the "bleeding edge" so that you can constantly move fast and adapt quickly. I've bucketed things into four stages, please see the post.

Gary: I love it!

Thank you for putting this into an equation we all can understand (especially in this industry!!).

-Avinash.

Avinash,

Love the post & your advice.

I absolutely agree with you that CONTEXT is the king (or queen if you are from the Commonwealth) and that along with ACTIONABLE INSIGHTS is what it takes to shift from reporting to analysis.

Also, a tool can never spew out analysis – it can deliver any number of reports. If a company really wants good analysis done then they have to hire the right PEOPLE.

And while I agree completely that analysis is about actionable insights, I also think (just my opinion) the analyst's job does not end with the analysis. The analyst should properly communicate those insights and work/follow-up with the relevant stakeholders to ensure action is being taken on the analysis. Analysis that ends with a presentation is only as good as reporting.

Regards,

Ned

Avinash, awesome article.

We focus on providing data-driven insights to our clients, based on web and enterprise data, and I feel that you have captured the essence of what I think every employee in my company should know.

@avinash and @Salvatore: Sorry for the confusion I created.

I should have pointed out that I see the _quality control_ in-house, and as long as this is connected/related to success measurement I see it as part of WA as well. WA services can be (partly/fully) outsourced like many other services, depending on how the business is organized and how it can best utilize them.

Also, a web analyst is not necessarily a perfect SEO/SEA/usability expert at the same time. But a web analyst knows all of that and can rate a service by its need, costs and efforts, and outcome.

I have experienced contractors controlling themselves without the requester having a chance to rate their work due to a lack of resources or know-how. This can become expensive, and in the worst case results in a nearsighted conclusion like "that doesn't help us, skip it".

I've also experienced opinions where web analytics was seen as simple reporting. Based on that, one agency was reporting SEO efforts, another one SEA activities, a third one the web traffic, etc. But no one had the overview AND the skillset to control it as it should be. This is where I see a need for a central (if not in-house) department that controls all of it AND is fully aware of the business goals.

Does this make sense? Maybe my mind is too much caught by the complex structures I experience(d) in my little universe..

@Matthias – First, I'm completely biased. I've spent the last 6 months building a web analytics service offering (yes, analytics not reporting) for the agency I work for. I completely disagree with you re: Outsourcing.

I understand your point: you're concerned that an outsourced team will act like any number of other agencies and just deliver the minimum level of crap that a client will accept. I think that outsourcing, while carrying that risk, brings other benefits which outweigh it for many companies, including:

– Separation from internal politics.

– (Relatively) cheap access to expertise which you just don't have in house.

– Objectivity (well, objective about different things, everyone is subjective in one way or another).

– Access to solutions, methodologies and processes from a number of different companies and tools.

– Better industry access.

– Established procedures.

– Access to a team of professional analysts (and thus multiple opinions on the data) rather than just the person you hire in house for the same spend (with a single opinion on the data).

This isn't even taking into consideration the difference in capability. I'd bet 10:1 that a reputable outsourced analyst agency will be far better at discovering issues and recommending solutions than the vast majority of internal analysts.

You make a valid point about the risk, but I don't consider a controllable & easily actionable (if it gets to reporting, sack them!) risk to be a reason to ignore all the potential benefits outsourced analysis brings to the table. Besides, isn't it just as likely that your internal analyst is going to revert to reporting at some point?

Great piece, as always, Avinash. But I'm pretty sure your list could have stopped right at #1. If it ain't actionable, it ain't analysis.

Avinash, I agree with your delineation between reporting and analyzing. I would like to add a point: tailor your analysis to the intended audience.

A member of senior management might say, "I don't want numbers, I want a sound bite." That means they get 3 bullets outlining the problem with support (a very simple stat) and 3 bullets with proposed solutions. For the exact same analysis though, you will deliver a higher level of support when talking to someone on your team or someone in IT because they want more detail about what is going on.

You have to talk to the people that you are delivering your analysis to and figure out what is important to them in an analysis. When every person gets an analysis that speaks to them, the recommended actions from the insight are more likely to be taken.

One of the greatest challenges is selling the value of the analysis, when asked simply for a report. I think this article provides some great fuel for that argument.

I am planning to digest your "Data Driven Boss" article over lunch and see what other juicy bits are there.

Thank you ~ great job!

Hi Avinash, in my opinion the difference is in the amount of profit it generates, I guess that's why "we identify when we see it" because it has caused some reaction :-)

Some charts may not need explanations to make me react, because they contain everything to show what I need to know. Similarly, a large text may not show me insights.

Without going into the quality of analysis, I think that to speak of analysis it is necessary to have used a method. The method may require context, segmentation, … but it must be a working method, not just moving data and graphics from one place to another. Nor would I consider rewriting what is already in the data to be analysis; I would call that "Convert Table to Text", a function that is already in Excel (joke).

Sometimes we can get lost, so analysis needs to be connected to business goals and KPIs, which is part of the method.

In my opinion the quality of analysis is proportional to the amount of profit it generates. Therefore, the analysis is complete at the time that allows us to prioritize actions.

So, for me, the difference is the method, which reports don't have.

Jaume

Alyson: Excellent point on analyzing for the intended audience.

I don't know though if you need to dial down the analysis. I suspect it is a matter of how much context you have or how much thinking the other person wants to do. Let me share an example.

An IT Guy/Gal does not want more "detail"; they just think they have more context, and that if you give them a data puke they'll have enough context and skills to look through it and make the decisions they want. In this case you and I will give them a login and password to the system :).

A front line Search Marketer might know a lot more than you or I about the intricacies of PPC and so they don't want our analysis (as we don't have skills or context) and they just want data. We'll set them up to get a customized "data puke", they can take it from there.

Both of these are very valid use cases where we do web reporting, and we can call it web reporting. We don't have to call it web analysis, and roses will still bloom in the world! :)

Jaume: You've shared a wonderful perspective. Sometimes self-evident data is key (especially if the recipient, boss, HiPPO etc., has all the context and skills needed to analyze the data and find insights).

But the key, always, as you say, is to structure our analytical efforts. Focus on business priorities and deliver analysis against business goals / bottom line. Hence my absolute love for frameworks like the Web Analytics Measurement Models!

-Avinash

Your article highlights the difference between data collection and simple summary statistics (e.g., mean, mode, range, frequency) on the one hand, and analysis on the other. The US Army in Army Pam 71-1 Table 6-5, Levels of Data, lists seven levels of data, from raw data (level 1) to extended analysis (level 6); the seventh level, 'evaluation or conclusion', is based on the first six AND applied judgment.

This seventh level requires not only the analysis of the data, but also adds the "so what?"; as in "So it is significant, what does it mean to me or how can I use this information?"

Evaluation is perhaps the hardest to do, since it requires not only an understanding of the 'numbers' but also an understanding of the context: the user, the system and its employment.

It was (is) not sufficient to say this is significant; one MUST also say why it is significant, and then what it means to the user. Significant results without meaning have no value, except perhaps to the statistician.

I agree with most of the comments here on the subject.

Reporting is the representation of data in a readable form (lists, charts etc.). This can be churned out by reporting engines. I consider Google Analytics another reporting mechanism.

Analysis is a human process of interpretation of represented data. So it is the meaning part of what data shows. As you mentioned, this activity involves reasoning and action to be taken by the user.

Avinash, once again your post, as we would say in French, "se passe de tout commentaire" (needs no comment).

I think Web Analysis should give you a good idea of what the future would look like IF…(insert actions).

A lot of great points have been highlighted by Avinash and other contributors.

Sadly, over here in North America too many executives are seduced by pretty graphs/charts with no patience to read in depth analysis and recommendations. This is particularly true of top marketing executives; the very people that need to embrace analysis. In marketing it’s all about the “shiny objects” and celebrating successful campaigns.

The constant quest for that one metric that shows success is the real killer of analysis in marketing teams e.g. “1,000,000 people visited our site vs. 900,000 in 2010, because we increased spend on Search by xx% or launched new tools on our site”.

How many of the new visitors became customers as a result of the marketing efforts? Marketing is not only about brand awareness and engagement.

BTW – I’m a digital marketer myself; just not impressed with the mindset of some of our colleagues.

The demand is for “Fisher-Priced”, one-page dashboards with metrics disguised as KPIs.

Good news: Web analysis is becoming accepted with increasing pressure to justify marketing spend.

Henceforth, we hereby banish discussions about impressions and visits :) Amen!

Why is it so hard to ask a client what they want and put it on paper?

We get the answer and display the data in an easily understood way with both quantitative and qualitative insight. It's about pleasing them, not the HiPPO. I find this to be my biggest challenge as a mid-level analyst.

Craig: I humbly think it is a function of the fact that most of the times the clients don't know what they want (and they ask for sub optimal stuff because of legacy reasons) and because most "Analysts" in the web analytics industry are essentially "Implementers" (creators of Javascript hacks and evars and custom variables and all that).

That perfect storm causes the issue you are pointing to.

I find that an insane obsession with outcomes and business analysis helps alleviate this issue. See the tips here: Six Rules for Creating a Data Driven Boss

Good luck Craig!

Yinka: I think you'll love this blog post: Online Marketing Still A Faith Based Initiative. Why? What's The Fix?

And I love this sentence in your comment: "The demand is for “Fisher-Priced”, one-page dashboards with metrics disguised as KPIs."

Thanks!

Avinash.

In my opinion, good analysis means you have to take account of wider consequences, not only the data itself. So you have to know the company, market segment or industry you are doing analysis for sufficiently well.

We encounter such situations quite frequently while implementing our business intelligence tool BellaDati for customers in, e.g., the mining or insurance industries. Usually customers operate large databases and prepare a lot of reports regularly, but do not have a clear idea of how their top management should interpret this data. Moreover, the market is very turbulent nowadays, which means management needs the most current data as soon as possible to make the right strategic decisions. Therefore our BI solution does not serve only as a data visualization tool, but also includes a data warehouse, a data cleaning utility and an analysis tool with agile cooperation supported by dynamic comments.

Great article on web analysis. I have a better grasp of the differences between web analysis and web reporting.

Great article as always, Avinash.

I've considered this difference before. It's prompted me to look at not only what reporting and analysing look like (and the differences between them), but also what's necessary from a website perspective to do reporting, forecasting, analysing and testing.

For example, how easy (or difficult) is it to carry out analysis on a site if it doesn't change? How important is testing to analysis, and are the two dependent on each other? My post is at

davechessgames.blogspot.com/2011/05/web-analytics-reporting-analysing.html and all comments are welcomed!

You hit the nail squarely on the head with this post. There are countless companies hyping themselves up for presenting mountains of data in real-time. As someone that runs a company in the analytics space, I can say that's not the hard part and not what people really "need".

Figuring out what all the data mean and presenting it in a way that actually helps people understand it and use it effectively is much, much harder.

Great post.

I think I have the bones to be an analyst.

And your post makes me want to kick my SEO guy's butt. Because they are doing 'reporting' and not 'analysis'. And we pay them a lot. Gee.

Good article! This helps us prepare reports for client projects.

I may be oversimplifying but a report is what the data generates, analysis is what we do to the report in order to prove/disprove a concept or idea.

It's an important difference, one is merely generated, the other is interpreted.

Avinash,

Great article. I completely agree with you that most of the time, Graphs and Dashboards are more reporting rather than providing any analysis or recommendations that can be truly actionable for the audience. I just want to point out that this is a noble effort (getting to recommendations) but quite difficult to do in most situations.

Now, I'll be the first to admit that I'm not a Web Analytics expert (that's why I bought your book)….I come from a more traditional Business Intelligence and Analytics background where the data doesn't come from consumer clicks. So when I read some of your rules to differentiate what is Reporting and Analysis from a non-Web Analytics context, I found some of them don't apply very well.

Now, I know this is patently unfair and not the primary purpose of your blog, but if you'll indulge me, just wanted to give you a different flavor of the challenges Analysts face:

For example:

Rule #1: You see actions for the business to take

The examples you've highlighted and the accompanying recommendations seem to show a fairly controlled environment and where the causal relationships seem simpler. In the non Web Analytics world, I can tell you that just understanding why the trend line shows a blip or is going down is a mini-project in itself as we are dealing with multiple variables. The best I can do is put forward some theories or hypotheses (after ruling out some reasons), but I've found that the business leader I'm presenting to – usually has a much better grasp of the context to interpret the data trends and take the action needed.

So, I'd suggest to you that while recommendations are a noble goal everyone should strive for, they presuppose that you know more about the business (not the data) than the audience you are presenting to. Now, that has been true in my consulting engagements, but even then it takes a long time to collect the data, summarize the trends, understand the business context, come up with hypotheses, test these and recommend a course of action for the decision maker. In short, getting to a recommendation takes a lot of time and involves more than data analysis. In most cases, if you manage to highlight and interpret what the data is telling you, that is considered a major win in itself.

Rules #2 and #8: Economic Value, Impact of actions

It's easier to talk about the cost of actions, but very difficult to quantify the economic value of many business actions in the non-web analytics world. Heck, ask any of the BI/Analytics software vendors or their customers to quantify the value (ROI) they have been able to obtain and you'll get productivity savings easily but rarely anything more. Value quantification itself is a science… I think that's why they make you pass 10 exams in Advanced Statistics etc. before they make you an Actuary and let you calculate the economic value of your pinkie or big toe. I'm not an Actuary, but I got a good appreciation of how difficult this is after spending 3 months trying to quantify the economic impact of scheduling downtime in a global setting (and using it to develop a recommendation engine).

Lastly Rule #9: 3 or more metrics in a table

This I don't quite understand. As you reiterate, every KPI or metric needs to be tied to a desired business outcome, so if the values for these metrics didn't come from some automatic process or tool (Rule 12 – love it), I'd still consider it analysis. You'd be amazed at how many Fortune 50 companies I've found that have trouble just understanding or calculating a "simple" metric like customer profitability (requires LOTS of analysis and you'll still get an iffy answer).

In summary:

Those are the only Rules that I found less than universal – all of your points and all the remaining Rules are quite useful and should inspire any analyst to go the extra mile.

PS:

Sorry about the length. Also, I got the link to the article from LinkedIn I think and immediately started reading…didn't notice until now that I'm a year late to the discussion.

Prakash: Please accept my deepest thanks for sharing your feedback.

Some thoughts on your feedback….

I have to admit that I very deeply passionately believe that you can't be a modern Analyst if you don't know a huge ton about the business. I reject the world I started in where the Analyst's primary role was to stick to data (and sadly data puking). I know that we are very good at data and queries and merging and reporting. But that is no longer sufficient.

You are right that the business leader will have context that the Analyst might not have. We aim to move beyond simply reporting data/analysis, we aim to present a cluster of solutions/hypotheses based on our best business knowledge. We can then seek executive input (and their strategic context) to prioritize actions.

It is difficult to quantify economic value. I would hypothesize that it is actually even harder in the digital world because unlike in a factory, supermarket, call center, shipping catalogs with digital we are trying to solve for so many more outcomes. But that is why the impact is bigger if you invest in it. So we go into it with open eyes. And if our client does not want to invest in it, if our company does not want to prioritize it, then we know that there will be a lower limit to success we can drive. :)

Even if it is a year late I very much appreciate your feedback. Most of my early experience was in the traditional BI/DW world and it is always so much fun to tie the digital to the traditional!

-Avinash.

Chuck nailed it in his comments on April 4, 2011, with "If you can automate it, it probably isn't analysis."

I recently shied away from a "web analysis Insights" job where the hiring manager kept asking about my SQL chops. OK, I can design a star schema. (Even though I invented it, like 50,000 other guys.) But I don't build an RDBMS until I know what the questions are, because once a data structure is locked in, it can only answer "how to better do what we are already doing," no matter how you query it.

OLAP *may* be able to find something new. ANNs are worth a try. But real analysis is about journeys into the "known unknown" or even the "unknown unknown" — and that you do by looking at clickstreams, at huge patterns in vast spreadsheets, throwing your mind out a thousand miles to touch a mind unlike your own, and trying to understand what they are thinking at both the conscious and subconscious level.

Real analysis happens staring at raw data, and then staring at clouds or waves to think about it. I might venture that no real analysis was ever completed in a cubicle, and no real analyst ever asked someone to do analysis in a cubicle.

Odd that an *A*vinash should be espousing journeys into vinash.

Nicholas: This is a wonderful comment on so many levels, thanks for contributing your perspective.

And bonus points on the A*vinash bit. :)

-Avinash.

Analytics Analysis is a skill I could be better at… given enough time, I'll get there :-)

That's why we hire a team of analytic experts and their main job is digging and mining :-D