There are lots of different tools, many of which measure the same thing. The numbers don't tie, there is confusion and a lack of trust and… well, I am getting ahead of myself.

The story starts with an email asking for thoughts on an issue a lot of us face on a daily basis.

Why don't I let the original author tell the story…

Can I ask you a question? I'm fighting mightily to make HBX able to measure marketing channels—pay per click, organic, email, banner ads, and onsite merchandising. But individual channel managers simply do not trust the data.

I think that data overall is damn accurate—total conversions by product is within 10% of in house measurement. I have a sneaking suspicion that web analytics tools are NOT the best measurers of true conversion by channel.

The data never matches what they get from their pay per click tool, their email tool etc. They blame the web analytics tool, when I think it's just that they are expecting something that can't be done.

Have you had any experience with this phenomenon, and do you have any advice on how to dive in and diagnose it?

This is a tough problem. Very real, very tough.

[Before we get too deep I want to highlight that this is as much an organization problem as it is a data problem.

For now the Web team is usually in a silo, Acquisition tends to be in a different silo, and furthermore it is not uncommon for different "Channels" to sit in their own homes that may or may not collaborate with each other. The corrosiveness that organization structure can cause is often underestimated.

Data gets blamed and, worse, any possible positive outcomes don't, well, come out. I wanted to stress this important element.]

So what to do?

Perhaps the most important thing to realize in this case is that your web analytics tool is often not measuring the same thing as your offsite tools are (for example: ppc, banners, affiliates etc). Even success (conversion) is not measured the same way.

Examples of issues causing differences:

# 1 : Many "off site" tools measure impressions (completely missing from web analytics tools) and clicks. For the latter, if you code your campaigns correctly then clicks come through to your web analytics tool, but remember that there you are usually measuring "visits" and "visitors", not clicks.
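To make the clicks versus visits gap concrete, here is a minimal sketch in Python. It assumes a visit ends after 30 minutes of inactivity, which is a common default but not something every tool uses, and the click timestamps are made up for illustration. Real tools sessionize on every page hit rather than on ad clicks alone, so treat this as a simplification.

```python
from datetime import datetime, timedelta

# Assumption: a visit ends after 30 minutes of inactivity.
# Many tools default to something like this, but check your own vendor.
SESSION_TIMEOUT = timedelta(minutes=30)

def count_visits(click_times):
    """Count visits by merging clicks that arrive within the timeout of each other."""
    visits = 0
    last_seen = None
    for ts in sorted(click_times):
        # A new visit starts on the first click, or after a gap longer than the timeout.
        if last_seen is None or ts - last_seen > SESSION_TIMEOUT:
            visits += 1
        last_seen = ts
    return visits

# Hypothetical ad clicks from one visitor on one day.
clicks = [
    datetime(2024, 1, 1, 9, 0),
    datetime(2024, 1, 1, 9, 10),   # 10 minutes later: same visit
    datetime(2024, 1, 1, 9, 25),   # 15 minutes later: same visit
    datetime(2024, 1, 1, 14, 0),   # hours later: a new visit
]

print(len(clicks), "clicks in the ad tool")
print(count_visits(clicks), "visits in the web analytics tool")
```

Under these assumptions the ad tool reports four clicks while the web analytics tool reports two visits, and neither number is wrong.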

# 2 : A good example is conversion. A ppc / banner / affiliate cookie is usually persistent and will "claim" sales even after ten visits of the customer, including for scenarios where the customer might have come back repeatedly through different channels (first paid, then organic, then banner, then paid again, then affiliate, then… you get the idea).

Most web analytics tools will credit the first channel or the last one, creating one more bone of contention with "off site" tools.

[As a bonus in this scenario, #2, your paid search vendor and your banner vendor and your affiliate vendor all "claim" credit for conversion even though it was just one customer that converted!!]
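The double counting in #2 is easy to see in a small sketch. The channel names, the journey, and the rule that every vendor with a persistent cookie claims the sale are all illustrative assumptions, not a description of how any particular vendor works.

```python
from collections import Counter

# Hypothetical journey for ONE customer who buys on the final visit.
journey = ["paid_search", "organic", "banner", "paid_search", "affiliate"]

# Assumption: each off-site vendor sets its own persistent cookie and
# claims the sale if the customer ever arrived through its channel.
VENDOR_CHANNELS = {"paid_search", "banner", "affiliate"}

def vendor_claims(journey):
    """Conversions claimed when every vendor counts the sale for itself."""
    return Counter({ch: 1 for ch in set(journey) if ch in VENDOR_CHANNELS})

def first_touch(journey):
    """Web analytics style: credit only the first channel."""
    return Counter({journey[0]: 1})

def last_touch(journey):
    """Web analytics style: credit only the last channel."""
    return Counter({journey[-1]: 1})

print("vendors claim:", sum(vendor_claims(journey).values()), "conversions")
print("first touch credits:", dict(first_touch(journey)))
print("last touch credits:", dict(last_touch(journey)))
```

With this journey the three vendors together claim three conversions for a single sale, while the web analytics tool credits exactly one channel: paid search under first touch, or affiliate under last touch.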

My approach to solving this is really quite simple. Five easy-to-execute steps:

Step One: Understand what each tool is actually measuring and how it is doing it. This means really getting down and dirty with the data and the vendors and pushing them hard to explain to you exactly what they do. If you are not a smidgen tech savvy then take your friendly IT Guy with you.

Step Two: Document. Create a slide / email outlining all the reasons why the numbers don't tie. Be intelligent, be creative. Here are two slides I created a long, long time ago, a real blast from the past…

The slide above provided a summary of why the two data sources were providing numbers that were 30% off. As you can imagine, it took a lot of work.

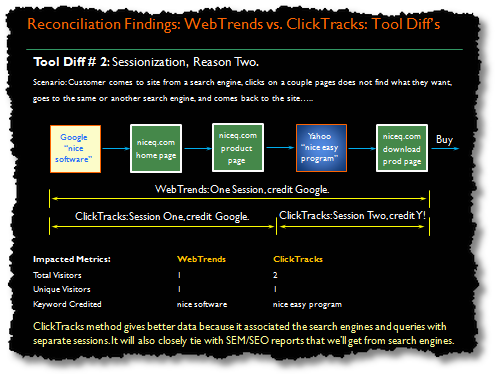

This slide explained one of the bullet items (1.II.b): how each tool dealt with sessionization when it came to search engines (and with the increasing dominance of search engines this turned out to be a big problem).

Notice the "pretty picture", it shows a very complex process with great clarity.

Step Three: Educate your users (in this case the data skeptics). Do dog and pony shows. Present to the senior management teams, or anyone who will touch the data. Leave them easy to refer to presents such as the slides above. Make sure that your audience is now smarter than you are because that will build trust (both in the data sources and, more importantly, in you).

Step Four: Start to report some high level trends between the tools, while doing your best to hide/remove the absolutes from any report / dashboard. In comparisons especially, a single month won't be good enough and might even be distracting.

It is not uncommon for me to index the numbers in some way and compare trends, rather than show the raw numbers. So xxx campaign / program went up in comparison to others by xx points (or xx percent) etc. The idea is to take the focus away from the absolute numbers.
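One simple way to index is to rescale each tool's series so that its first period equals 100. The sketch below does that; the function name and the monthly figures are invented for illustration, with the ad tool deliberately set 30% higher than the web analytics tool in every month.

```python
def index_series(values, base=100.0):
    """Rescale a series so that its first period equals `base`."""
    first = values[0]
    if first == 0:
        raise ValueError("cannot index against a zero first period")
    return [round(v / first * base, 1) for v in values]

# Hypothetical monthly conversions for one campaign, as reported by two tools.
ad_tool = [1300, 1430, 1560, 1495]
web_analytics = [1000, 1100, 1200, 1150]

print("ad tool index:      ", index_series(ad_tool))
print("web analytics index:", index_series(web_analytics))
```

Here the raw numbers never agree, yet both indexed series come out as 100, 110, 120, 115. The tools tell the same story about the trend, and that is the conversation you want to have.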

It will take a while for them to get comfortable with the numbers, depending on the organization complexity, politics and how good a job you have done in Step Three.

Step Five: Pick your "poison".

This is a key step. You need to wean your organization from relying on multiple tools for the same data. The goal is not to kill different tools (unless they are utterly redundant) but rather not to have the same Metric from different tools.

You need to pick the best tool for the best metric.

It is ok to let your Acquisition team use DoubleClick / Atlas / Whatever to report end to end on your banner / display / digital media campaigns (make sure they are following customers through conversion).

Ditto for your Pay Per Click (PPC) / Search Engine Marketing (SEM) team. If you are big then your agency probably has lots more data in its own possession than you could have, and they probably want to move faster than you could. Don't compete with them.

Some web analytics tools do promise that you can bring in any data and merge it with your web analytics tool and it will work wonderfully. Sometimes it does, often it does not.

Just pick your poison: the best, cleanest possible source of data for each job, and use it.

If you are the Web Analytics person should you just quit and go home? No.

Focus on data that you have from your web analytics tool that they (other tools / teams) don't have.

For example: What is happening on the site after these skeptics deliver Visitors to your site? Use site overlay, bounce rate, content value, testing, all things they can't do, don't have access to but in the end help them understand what is going on on the site and how they, skeptics, can make more money from your data.

Most siloed tools (PPC, Banners, Affiliates… etc) can't actually do deep site analytics. So forget the tactical, they will do that better, and focus on the strategic. Rather than become combative be "collaborative".

You do that and you have your hook! No one will argue with you about differences in data.

In summary: While this is a tough problem that we all face, it is possible to bring about some sanity to the existence of multiple data sources. It is not possible for now to just have one tool for all your needs, but if you follow the above five step process then you'll be able to bring out the best in each and benefit from it.

Ok, now it's your turn!

Please share your perspectives, critique, additions, subtractions, bouquets and brickbats via comments. Thank you.

[Like this post? For more posts like this please click here, if it might be of interest please check out my book: Web Analytics: An Hour A Day.]

Hi Avinash, Great post as always. You have given a solution for the problem which is unsolvable by every means.

Along these lines, a problem that I have had in the past – providing web analytics consultancy for clients – is trying to collaborate effectively with other teams, e.g. the search team.

For example, if Adwords has more conversions than I am recording on the analytics solutions – one of the reasons being the sessionisation you so clearly highlighted – the search/PPC team will not want to present the lower numbers to the client and it is quite tough to get them to understand the concept of sessionisation or other variables. Thus the web analyst role I feel has to become more of a machiavellian creature of politics, influence and buy-in to get the big picture across internally and to clients.

Thanks for a great post Avinash,

Web Analytics Princess http://www.marianina.com

There is not one word in this article I disagree with. ;o)

Avinash,

Another great post. Agree 100% with all your comments. Rolling up your sleeves and getting your hands dirty is key. Another element is to understand the rules, filters and adjustments that are used in the implementation. Using an Excel chart to "bridge the gap" is very powerful.

At the end of the day, this data does not go on the "books" and is in context. As long as it is within acceptable variance and trends correctly, some decisions can be made – this should not be an excuse to stop looking at data and avoid data driven decisions. Of course, if your data is way off – it's time to get your hands dirty.

-Rahul

Great post Avinash,

For us one of the key education points for clients has been that, as you say, it's okay to use (for instance) Google Adwords tracking to optimise the Google Adwords campaign, but to understand the overall cost of each channel in comparison, the central WA tool is everything. This has been especially true of affiliate marketing where we see the biggest gap between the affiliate measurement tool and the actual CPA values vs other channels (or is this just me?).

In addition I've always found people very open to the "double counting" argument, as you mentioned, the one where the sum of all the parts is greater than the whole. This has always been the one that makes people completely understand the "difference" between the central tool and other ways of measurement.

-Mike

Good stuff. I have found that the process of reconciling measurements from different tools just isn't worth the time and effort. Though we do need to monitor the differences. The variances I have dealt with have not been significant enough to affect ROI decisions. We just need to educate the players on why differences exist.

Mike P : Excellent point! Often in a sea of multiple tools the only apples-to-apples comparison between channels can happen via whatever web analytics tool you have, because it will do computations equally wrong or right for each channel.

The only requirement being that the various channels be tagged with trackable parameters / cookie values. Do that and you are set.

Steve : Great sense of humor my friend! Thank you.

-Avinash.

For the first time ever I understand what sessionization means! Maybe that sounds really basic. As a Marketer I love this blog because you make web analytics accessible.

I have been using my Agency's tool for my affiliate tracking, now I have a blueprint for how I can best work with my in house analytics team.

Well said, Avinash! The one area that you left a bit open-ended is what happens *after* you drill down a bit with the skeptics as to what is causing the differences. What we would all HOPE would happen is that we would have truly educated the skeptics as to how our WA solution works as opposed to how their tool(s) of choice work, giving them a deeper, richer understanding of how multiple versions of the truth don't necessarily mean a liar is present.

More often, what I've found is that this deeper explanation — even when presented as cleanly and clearly as possible — winds up with eyes glazing over. Not entirely a bad thing, as these skeptics often have a valid takeaway: "This stuff is complicated, but that guy not only knows his tool inside and out, he knows more about what's going on under the hood in *my* tool than I do. I think it's best to stop beating him up and start recognizing him as a valuable resource for me."

To be clear — the LAST thing you want as a *goal* for one of these "explanation" presentations is to simply show how smart you are and MAKE their eyes glaze over. That's a good way to damage the relationship by coming off as a pompous ass. But, if you honestly make the effort to make the details comprehensible and have that as your singular goal, even if you don't achieve THAT goal, you may still win a strong ally!

This article literally made my day! Thank You!

Interesting read, Avinash.

For anyone looking for a free way to show heatmaps to potential skeptics (they tend to like sexy visualizations) check out Crazy Egg. It's a great place to start playing around with heatmapping and overlays. Their new 'confetti' option works pretty well too.

It's free up to 5,000 visits so it's a good place to get started and can provide enough visits for a decent conclusion.

Excellent post Avinash.

One point in particular that's interesting is that "silos" inside an organization seem to be the crux of these problems in almost every area of IT. Be it Content Management, Web Analytics, Product Design etc.

Unless we are able to change the way of functioning inside an organization, issues like these of having "different perspectives" of the "same thing" will continue to be a problem.

Really great post, Avinash.

I am writing to get your help with a problem: how do you calculate unique visitors?

Just assume that we are on a retail web site. We may use email_id or something like that to identify the unique visitor. But if I want to analyze the correlation between age and product consumption, I think this method is going to break down. For example, I use this email_id to log in while my father also uses this id to log in to pursue different products. We share the same personal information while actually we are different ages; this definitely makes the result inaccurate. Any suggestions? Thanks.

Seven :

A Unique Visitor is usually identified by an anonymous cookie that is placed on a computer. This is a text file that usually does not contain any personal information.

As such, it is very difficult to tie things down to a person.

Some companies will capture and store PII (Personally Identifiable Information) in the cookies or url stems but this is a practice that is usually not looked upon approvingly (both by practitioners and customers).

At the moment capturing and showing demographic information is lots of guess work (there are third parties that can provide you this information). But I want to stress that purely knowing someone is Male or Female is usually of marginal value (in terms of your ability to then take that data and find insights and turn them around into action).

There is a lot more behavioral data you can, and do, capture in your web analytics tool that can be greatly actionable (and more than demographic). Additionally understanding micro-segments of your customers (campaigns, sources, organic / ppc, new vs returning, content consumed etc etc) are super actionable. I would recommend chasing those (and perhaps you are already on that path!).

Hope this helps.

-Avinash.

Thank you very much for your answer.

It may be really hard to identify the unique visitor (a cookie is one way to identify this but certainly it can not represent the exact unique visitor, as you said). I think that we shouldn't be restricted by the metrics; what we should do is pay more attention to customer orientation. All the metrics that can lead us to this intent are good metrics.

By the way, really happy to read your articles, it helps me to enlarge my knowledge. I hope I can learn more from this. Thank you.

Stumbled across this article today while reading up on conversion metrics. I just dealt with this at work comparing numbers between Google Adwords and our new webanalytics tool. The Adwords numbers are much higher than the analytics tool, and I was pretty much blamed for not being vigilant enough about the numbers–because the difference was deemed more than acceptable and there is too much double counting going on. But the more I read on this the more I'm convinced the double counting is good because all marketing items work together to create a customer. Of course, that does make it hard to determine which marketing effort does attribute what amount of the conversions. How does one reconcile these two things?

Typicalwebgirl: First it is important to realize that most of the time your AdWords number will be higher than your Omniture/Google Analytics/WebTrends number. They do attribution in very different ways.

Some of the reasons mentioned here would apply in your case as well:

Why do AdWords and Analytics show different figures in my reports?

My buddy John and I had done a short series of videos that goes much deeper into this. You'll find it here:

Standard Metrics Revisited: #5 : Conversion / ROI Attribution

Hope this helps.

-Avinash.